I wrote a blog post on the fragmented data pipeline that is causing us all to be confused about coronavirus.

Data quality doesn't get as much attention as modeling, but it is usually as important if not more important. (1/16) https://chris-said.io/2020/04/14/fragmented-data-pipelines/

In the post, I discuss some constitutional reasons why the data is bad (hint: Tenth Amendment), and some of the ways by which we can make things better.

But first, some background:

None of the data makes any sense. The UK has a daily case count 60 times higher than Australia. Italy has a case fatality rate 12 times higher than Pakistan.

Normally, heterogeneity of this sort could be an enormously useful way to teach us about the disease.

But unfortunately, it is hard to understand the data when some countries test at 10x-100x the rates of other countries, and then don't report their testing rates.

It's totally understandable that some countries would test more than others.

But what's not ok is that so many countries don't report how much they test, who they test, or even what counts as a test!

Just as there is incomplete and unstandardized reporting among countries, there is incomplete and unstandardized reporting among US states.

Even among economically advanced states like California and New York.

As a result of all of this, our infection count estimates could be 10x to 100x off, depending on the country, and we are missing out on an invaluable opportunity to learn about the regional and behavioral changes that affect disease spread.

None of this is groundbreaking stuff. Epidemiologists are keenly aware of the limitations of crude infection counts.

And government civil servants are all concerned about the reporting issues, and working hard to address them.

This isn’t a knowledge problem. Instead, this is caused by some combination of three factors:

Reason #1: Models get more attention than upstream data issues.

Reason #2: Fragmented global infrastructure + Tenth Amendment

So this brings me to the crux of my post.

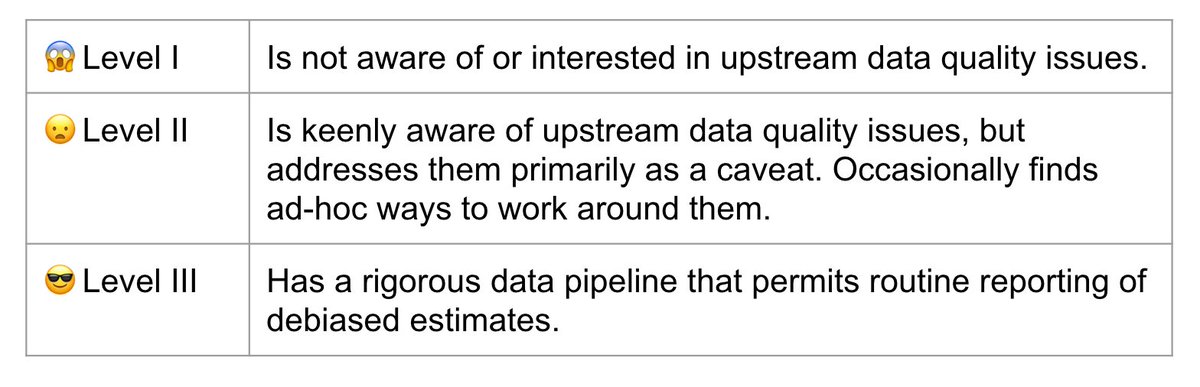

In my field of data science, you can determine the maturity of data organizations by their outlook on upstream data quality issues. There are roughly three levels. Read this carefully.

Our public health infrastructure is at Level II.

My strong claim, and I mean this in as constructive a way as possible, is that Level II is unacceptable. We need to be at Level III before the next pandemic hits.

How do we get there? My post goes into more detail, but a few ideas that come to mind are financial incentives via the ELC Cooperative Agreement, assurance contracts for international cooperation, cultural shifts, and funding to make this all happen. https://chris-said.io/2020/04/14/fragmented-data-pipelines/

^ I have reached out to several policy experts about the feasibility of these ideas. If you know anybody who has expertise here, I would be very interested to hear their views.

(16/16)
