Our work 'Systematic generalization emerges in Seq2Seq models with variability in data' was published at the #ICLR2020 workshop Bridging AI and Cognitive Science (BAICS).

We show that an LSTM+attn model can exhibit sys. gen. and analyze its behavior when it does. 👇 thread.

https://baicsworkshop.github.io/papers/BAICS_11.pdf

Fodor et al. (1988) argue that language is systematic: if we understand 'John loves Mary', we also understand 'Mary loves John', because the underlying concepts needed to process both sentences (in fact, anything of the form NP Vt NP) are the same.

Fodor argues that since language is systematic and thought/cognition is like a language (Language of Thought, 1975), connectionist approaches can't model cognition. Classical approaches (symbolic AI), on the other hand, are fine in this respect, as they have combinatorial syntax & semantics by design.

Systematicity, seen as an aspect of generalization, implies that a model that understands any one data point should understand all data points containing the same concepts. Going further, after learning N concepts, a model should generalize to new combinations of those concepts.

@LakeBrenden et al. 2017's SCAN dataset evaluates the sys. gen. of a seq2seq model trained on pairs like ('walk', 'WALK'), ('walk twice', 'WALK WALK') and ('jump', 'JUMP') by testing it on pairs like ('jump twice', 'JUMP JUMP'), as they are just a new combination of already learned concepts.

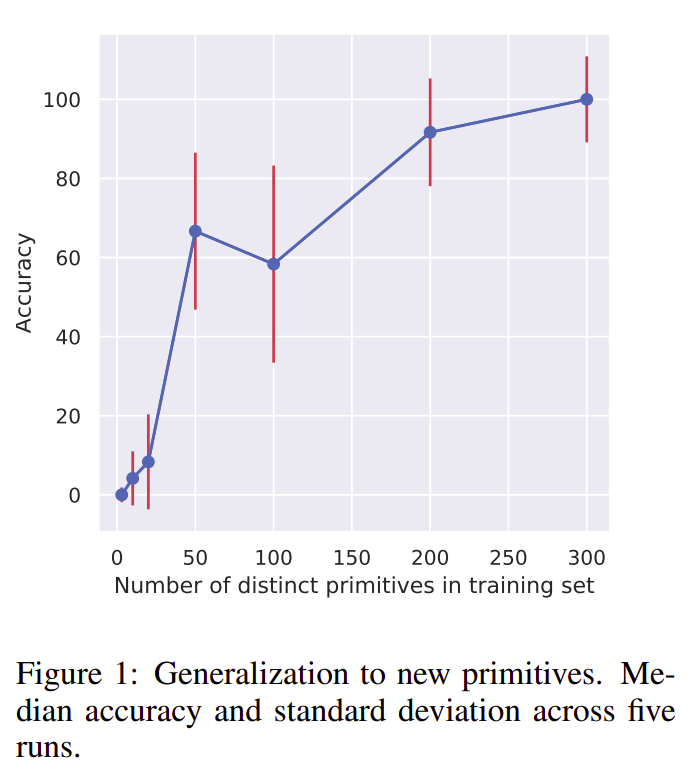
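
A minimal sketch of that kind of split, assuming a toy fragment with only two repetition modifiers rather than the full SCAN grammar. The vocabulary and the names `ACTIONS`, `REPEATS`, `interpret` and `make_split` are illustrative placeholders, not taken from the SCAN release.

```python
# Toy SCAN-style data. This is a hypothetical two-modifier fragment,
# not the real SCAN grammar or its official splits.
ACTIONS = {"walk": "WALK", "jump": "JUMP", "run": "RUN", "look": "LOOK"}
REPEATS = {"twice": 2, "thrice": 3}


def interpret(command: str) -> str:
    """Map a command such as 'walk twice' to its action sequence 'WALK WALK'."""
    words = command.split()
    action = ACTIONS[words[0]]
    count = REPEATS[words[1]] if len(words) > 1 else 1
    return " ".join([action] * count)


def make_split(held_out: str = "jump"):
    """Train on everything except '<held_out> <modifier>'; test on exactly those."""
    train, test = [], []
    for prim in ACTIONS:
        # Every primitive, including the held-out one, is seen in isolation.
        train.append((prim, interpret(prim)))
        for mod in REPEATS:
            cmd = f"{prim} {mod}"
            target = test if prim == held_out else train
            target.append((cmd, interpret(cmd)))
    return train, test


train_pairs, test_pairs = make_split()
```

Here `train_pairs` contains ('jump', 'JUMP') but no modified 'jump' command, while `test_pairs` holds only the modified 'jump' commands, so solving the test set requires recombining known pieces.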

@FelixHill84 @santoroAI et al. 2019 showed that an RL agent systematically generalizes to a new object if a command like 'pick' is trained with more objects, i.e. it generalizes to 'pick NewObj' after being trained on 'pick Obj1', 'pick Obj2'... 'pick ObjN', where N is large enough for the task.

Given the above work, it is a fairly natural next step to test whether a seq2seq model exhibits systematic generalization, solving ('jump twice', 'JUMP JUMP') after being trained on a sufficiently large number N of different primitives like 'walk1 twice', 'walk2 twice' ... 'walkN twice'.
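
One way to picture this setup is a generator that varies only the number of distinct primitives a modifier is trained with. This is a sketch under assumptions: the function name `variability_dataset`, the output tokens `WALK1`..`WALKN`, and the choice to also show each primitive in isolation are hypothetical, not the paper's actual data pipeline.

```python
def variability_dataset(n_primitives: int, modifier: str = "twice", repeat: int = 2):
    """Build a toy train/test split with n_primitives synthetic primitives.

    Primitive names (walk1..walkN) and outputs (WALK1..WALKN) are
    placeholders for illustration; 'jump' is the held-out primitive.
    """
    train = []
    for i in range(1, n_primitives + 1):
        prim, act = f"walk{i}", f"WALK{i}"
        train.append((prim, act))  # primitive in isolation
        train.append((f"{prim} {modifier}", " ".join([act] * repeat)))
    train.append(("jump", "JUMP"))  # 'jump' is never seen with the modifier
    test = [(f"jump {modifier}", " ".join(["JUMP"] * repeat))]
    return train, test


# Larger n_primitives means the modifier is seen with more distinct arguments.
for n in (1, 4, 16):
    train, test = variability_dataset(n)
    print(n, len(train), test)
```

The test pair stays fixed while the training set grows with N, so any change in test accuracy can be attributed to the added variability.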

Turns out, a plain LSTM+attn learns 6 modifiers in SCAN (out of 8 modifiers & 2 conjunctions) when the number of distinct primitives in the dataset is increased, and generalizes to commands with new primitives that were never trained with any modifier, like ('jump twice', 'JUMP JUMP').

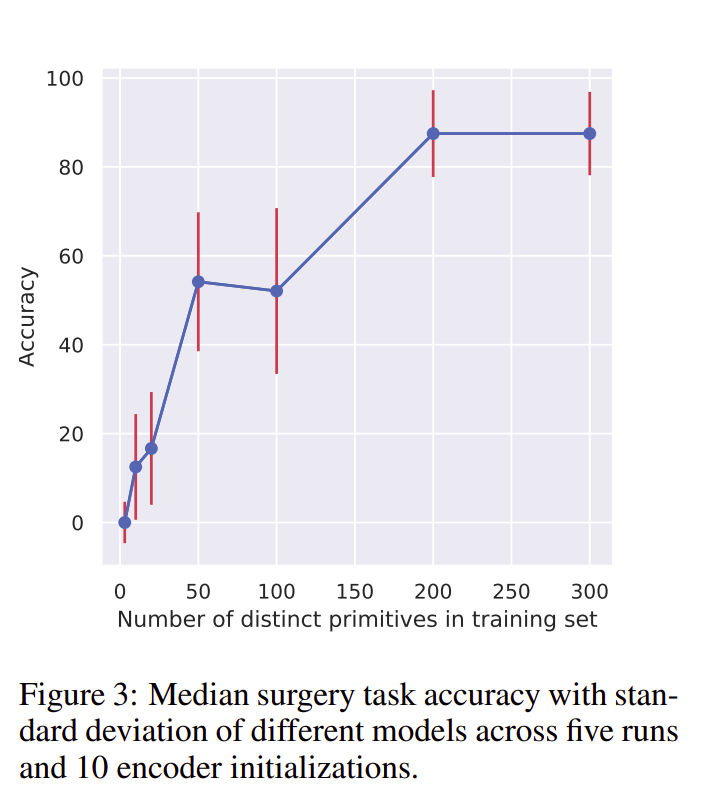

We found a behavior that is highly correlated with sys. gen.: if we subtract 'walk' from the command 'walk twice' and add 'jump', the model gives the output of 'jump twice'. The vectors added/subtracted are from the encoder. This behavior and sys. gen. have a Pearson correlation coefficient of 0.99.
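
The arithmetic itself can be sketched as follows. This is an illustration under loud assumptions: `encode` is a stand-in that sums random word embeddings, not an LSTM encoder, so the analogy holds here by construction. A real analysis would plug in the trained encoder's vectors and decode from the edited one. `pearson` shows how an analogy score and test accuracy measured across models could be correlated.

```python
import math
import random

random.seed(0)
DIM = 8
VOCAB = ["walk", "jump", "twice"]
# Placeholder embeddings; a real analysis would use trained encoder states.
EMB = {w: [random.gauss(0, 1) for _ in range(DIM)] for w in VOCAB}


def encode(command: str) -> list:
    """Stand-in encoder: sum of word embeddings (NOT an LSTM)."""
    vec = [0.0] * DIM
    for word in command.split():
        vec = [a + b for a, b in zip(vec, EMB[word])]
    return vec


def swap_primitive(command: str, old: str, new: str) -> list:
    """Compute enc(command) - enc(old) + enc(new)."""
    c, o, n = encode(command), encode(old), encode(new)
    return [ci - oi + ni for ci, oi, ni in zip(c, o, n)]


def cosine(u, v) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def pearson(xs, ys) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


edited = swap_primitive("walk twice", "walk", "jump")
similarity = cosine(edited, encode("jump twice"))
```

With a trained model, `similarity` (or the decoded output of `edited`) would be an empirical quantity rather than a guaranteed match.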

Insight: The more primitives a modifier is applied to during training, the more sys. gen. emerges, and the more the model represents the modifier independently of any particular instance of the variable it operates on. We call these instance-independent representations for modifiers, and they are highly correlated with sys. gen.

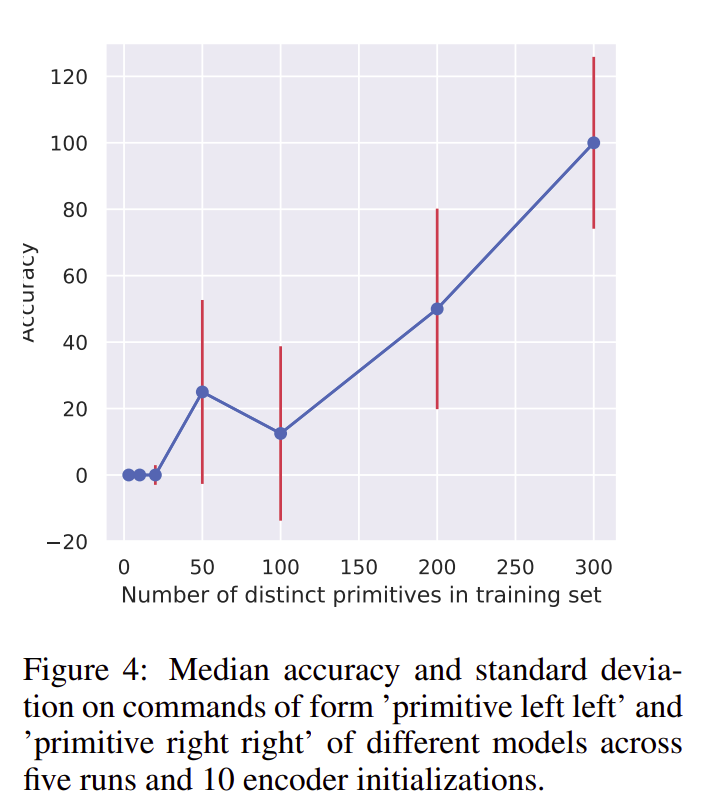

Models trained on primitive variables like 'jump twice' generalized to compound variables like '{jump twice} twice', though in a limited way. Syntactic attn and meta-seq2seq learning, which solved SCAN, didn't show this behavior; more interestingly, not even when trained with 300 different primitives.
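
To make the compound case concrete, here is a small reference interpreter. It assumes that braces group a sub-command whose whole output is then repeated by the outer modifier. That reading and the resulting target 'JUMP JUMP JUMP JUMP' are an assumption for illustration, as are the names `REPEATS` and `interpret`.

```python
REPEATS = {"twice": 2, "thrice": 3}


def interpret(command: str) -> list:
    """Interpret a command whose argument may be a braced compound.

    Assumed semantics: '{X} twice' repeats the full output of X twice.
    """
    command = command.strip()
    for mod, count in REPEATS.items():
        suffix = " " + mod
        if command.endswith(suffix):
            # Peel off the outermost modifier and repeat the inner output.
            return interpret(command[: -len(suffix)]) * count
    if command.startswith("{") and command.endswith("}"):
        return interpret(command[1:-1])  # unwrap one level of braces
    return [command.upper()]  # bare primitive, e.g. 'jump' -> 'JUMP'


primitive_case = " ".join(interpret("jump twice"))
compound_case = " ".join(interpret("{jump twice} twice"))
```

Under this reading the compound command needs the modifier to apply to a sequence rather than a single token, which is what makes it a harder test than 'jump twice'.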

Since you've come this far into the thread, I would really like to talk to you and hear your comments on this simple but interesting (really?) work.

Also, check out all the work on the SCAN (@LakeBrenden), CLOSURE (@DBahdanau), and gSCAN (@jacobandreas, @LauraRuis7) datasets.

Finally, I would like to thank my pal @DMohith_ for great discussions.

* I somehow think that @tallinzen is the one who reviewed this work (the review was double-blind) and gave it the green light. Regardless, I am inspired by and thankful for his opinions/thoughts/papers.
