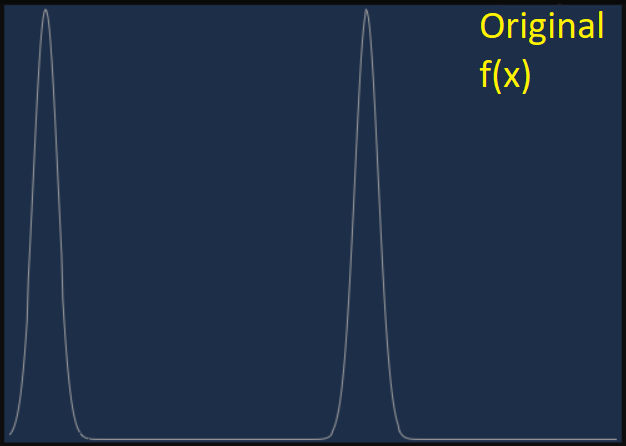

A mini Importance Sampling adventure: imagine a signal that we need to integrate (sum its samples) over its domain. It could, for example, be an environment map convolution for diffuse lighting (1/6).
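For a discrete 1D signal, integrating over the domain comes down to summing every sample, scaled by the sample spacing. The sketch below assumes a hypothetical signal stored as a plain Python list (a dim background with one narrow bright feature). The names `integrate_exact` and `signal` are illustrative only.

```python
def integrate_exact(signal, dx=1.0):
    """Reference result: sum every sample, scaled by the sample spacing dx."""
    return sum(signal) * dx


# Hypothetical 1D signal: 90 dim samples and a narrow bright feature of 10 samples.
signal = [1.0] * 90 + [100.0] * 10
print(integrate_exact(signal))  # 1090.0
```

This exact sum touches every sample; it is the value that any cheaper, sampled estimate should approach.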

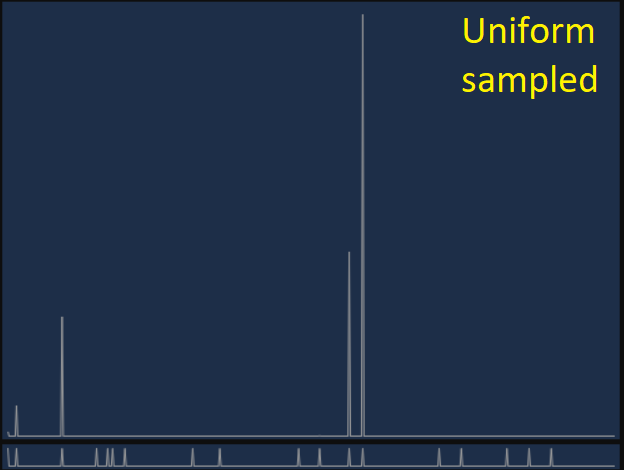

Capturing and processing many samples is expensive, so we often randomly select a few and sum only those. If we select samples uniformly (each with the same probability), though, we risk missing important features in the signal, e.g. areas with large radiance (2/6).
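A minimal sketch of that uniform strategy, assuming the same kind of hypothetical 1D list: pick `n` indices with equal probability and multiply each value by the number of samples in the domain, so the estimate is correct on average. With only a handful of samples, the result swings wildly depending on whether any of them happen to land on the bright feature.

```python
import random


def estimate_uniform(signal, n, rng):
    """Estimate sum(signal) from n uniformly chosen samples.

    Each index has probability 1 / len(signal), so each sample value is
    divided by that probability (i.e. multiplied by len(signal)) before averaging.
    """
    size = len(signal)
    total = 0.0
    for _ in range(n):
        i = rng.randrange(size)
        total += signal[i] * size
    return total / n


signal = [1.0] * 90 + [100.0] * 10  # exact sum: 1090.0
rng = random.Random(0)
for _ in range(5):
    # With 8 samples, the estimate depends heavily on how many hit the bright feature.
    print(estimate_uniform(signal, 8, rng))
```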

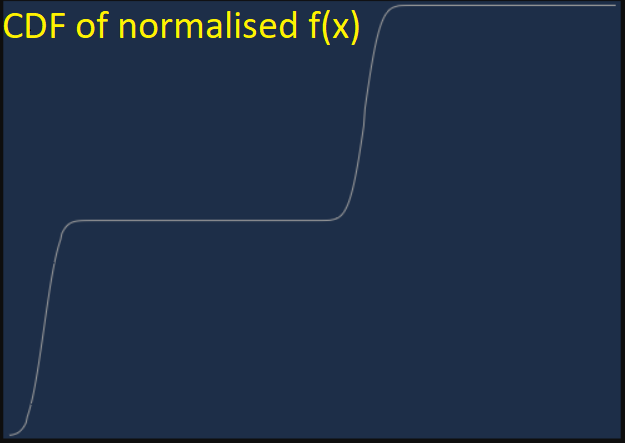

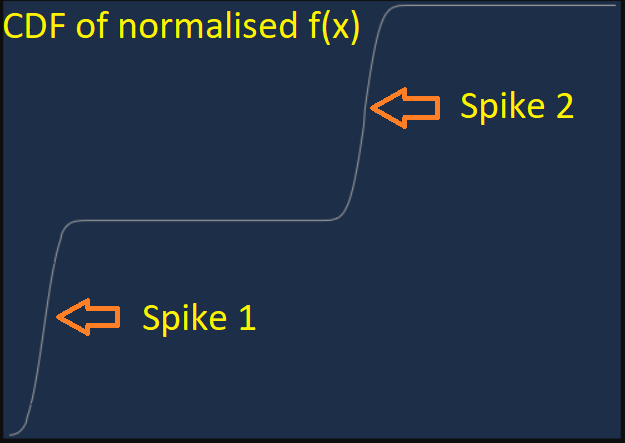

If the signal is non-negative (like an image), we can normalise its values (divide each one by the sum of all values) and treat it as a probability density function (pdf). From this, we can calculate the cumulative distribution function (CDF) (3/6).
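In code, normalising a non-negative 1D signal into a discrete pdf and accumulating it into a CDF might look like the sketch below. It assumes a small hypothetical list whose sum is a power of two, so the printed values are exact; `make_pdf` and `make_cdf` are illustrative names.

```python
from itertools import accumulate


def make_pdf(signal):
    """Normalise a non-negative signal so its values sum to 1."""
    if any(v < 0 for v in signal):
        raise ValueError("signal must be non-negative")
    total = sum(signal)
    if total == 0:
        raise ValueError("signal must have a positive sum")
    return [v / total for v in signal]


def make_cdf(pdf):
    """Running sum: cdf[i] = pdf[0] + pdf[1] + ... + pdf[i]."""
    return list(accumulate(pdf))


signal = [1.0, 1.0, 4.0, 2.0]
pdf = make_pdf(signal)
cdf = make_cdf(pdf)
print(pdf)  # [0.125, 0.125, 0.5, 0.25]
print(cdf)  # [0.125, 0.25, 0.75, 1.0]
```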

The value of the CDF at position X is the sum of the pdf values up to and including that point. The CDF has some nice properties: it never decreases, and it ends at a maximum of 1. Also, regions where the original signal has large values appear as steep slopes, while regions with small values are flatter (4/6).
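Both properties can be checked directly on a discrete CDF. The sketch below, again on a hypothetical 4-sample signal, also recovers each step's "slope" as the difference between neighbouring CDF values. That difference is exactly the pdf, so the steep steps sit where the signal is large. `cdf_slopes` is an illustrative helper, not a library function.

```python
from itertools import accumulate


def cdf_slopes(cdf):
    """Per-sample rise of a discrete CDF; this equals the pdf value at each sample."""
    return [cdf[0]] + [b - a for a, b in zip(cdf, cdf[1:])]


signal = [1.0, 1.0, 4.0, 2.0]
total = sum(signal)
cdf = list(accumulate(v / total for v in signal))

# Never decreases: every step adds a non-negative pdf value.
print(all(a <= b for a, b in zip(cdf, cdf[1:])))  # True
# Ends at 1 (up to floating-point rounding for general inputs).
print(abs(cdf[-1] - 1.0) < 1e-12)  # True
# The steepest step is at index 2, where the signal is largest.
print(cdf_slopes(cdf))  # [0.125, 0.125, 0.5, 0.25]
```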

This means that if we uniformly select random Y values on the vertical axis and project them horizontally until they meet the curve, then read off the X position there, larger features in the signal will receive more samples, while small ones, where the CDF curve is flatter, will receive fewer (5/6).
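Projecting a uniform Y value across to the curve is known as inverse-CDF (inverse transform) sampling. For a discrete CDF, a binary search for the first entry greater than `u` performs the projection. This sketch uses Python's `bisect.bisect_right` on the same hypothetical 4-sample signal and counts how often each index is picked; `build_cdf` and `sample_index` are illustrative names.

```python
import bisect
import random
from itertools import accumulate


def build_cdf(signal):
    total = sum(signal)
    return list(accumulate(v / total for v in signal))


def sample_index(cdf, u):
    """Map u in [0, 1) to the first index whose CDF value exceeds u."""
    i = bisect.bisect_right(cdf, u)
    # Guard against rounding leaving cdf[-1] slightly below 1.
    return min(i, len(cdf) - 1)


signal = [1.0, 1.0, 4.0, 2.0]
cdf = build_cdf(signal)  # [0.125, 0.25, 0.75, 1.0]

rng = random.Random(42)
counts = [0] * len(signal)
for _ in range(10_000):
    counts[sample_index(cdf, rng.random())] += 1
print(counts)  # roughly proportional to the signal (1 : 1 : 4 : 2)
```

Samples with a zero pdf value produce a flat CDF step, and `bisect_right` never lands on them, which matches the "flat regions get fewer samples" intuition.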

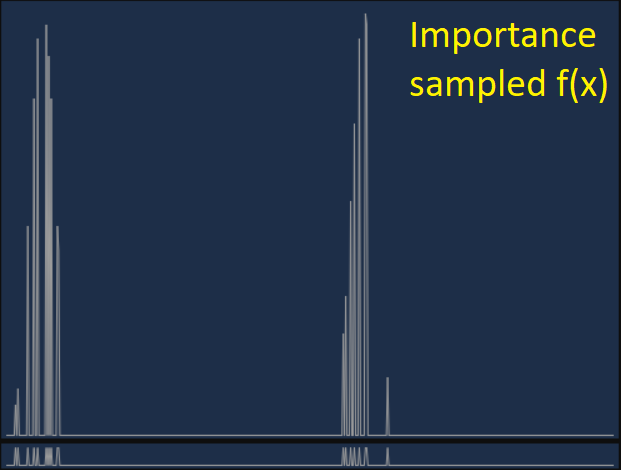

Finally, if we use those X positions to sample the original signal, most samples will fall on the large features of the signal and won't be wasted, allowing us to represent the original signal better (6/6).
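To turn those samples into an estimate of the integral, each sampled value is usually divided by the pdf value that produced it (the standard importance-sampling estimator f(x)/p(x)), which keeps the result unbiased. In the sketch below, the pdf is built from a hypothetical `guide` that only roughly matches the signal. A pdf exactly proportional to the signal would require knowing its full sum in advance. The sketch then compares the spread of 8-sample estimates against uniform sampling. All names and data are illustrative assumptions.

```python
import bisect
import random
from itertools import accumulate


def estimate_uniform(signal, n, rng):
    """Baseline: n uniform samples, each weighted by 1 / (1 / len(signal))."""
    size = len(signal)
    return sum(signal[rng.randrange(size)] * size for _ in range(n)) / n


def estimate_importance(signal, guide, n, rng):
    """n samples drawn in proportion to `guide`, each weighted by 1 / pdf.

    Assumes every guide value is positive, so no pdf value is zero.
    """
    total = sum(guide)
    pdf = [g / total for g in guide]
    cdf = list(accumulate(pdf))
    acc = 0.0
    for _ in range(n):
        i = min(bisect.bisect_right(cdf, rng.random()), len(cdf) - 1)
        acc += signal[i] / pdf[i]  # f(x) / p(x)
    return acc / n


def mean_abs_error(estimates, exact=1090.0):
    return sum(abs(e - exact) for e in estimates) / len(estimates)


signal = [1.0] * 90 + [100.0] * 10  # exact sum: 1090.0
guide = [1.0] * 90 + [80.0] * 10    # hypothetical rough approximation of signal

rng = random.Random(7)
uniform = [estimate_uniform(signal, 8, rng) for _ in range(200)]
weighted = [estimate_importance(signal, guide, 8, rng) for _ in range(200)]
print("uniform    mean abs error:", mean_abs_error(uniform))
print("importance mean abs error:", mean_abs_error(weighted))
```

If `guide` were exactly proportional to `signal`, every sample would return the exact sum (zero variance). The closer the guide is to the signal, the smaller the spread.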

Read on Twitter