Are standard epidemiological models useful for decision-making in a real epidemic in real time? We have been pondering this question recently at @LdnMathLab. Here are some (not too technical) thoughts.

There are two types of epidemiological model. Compartmental models, like SIR, divide the population into different disease states and model flows between the states. Agent-based models simulate potentially transmissive interactions between virtual individuals.

They all have one thing in common. They attempt to model the full course of an epidemic, from first infection to final state. Let's call them "full models".

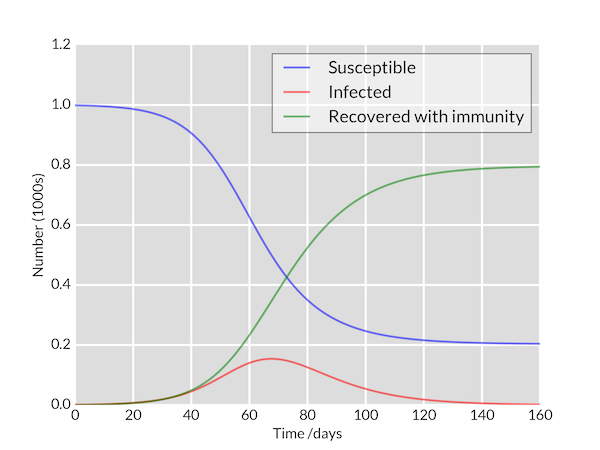

A full model predicts an S-shaped curve for completed infections over time (green line, credit @scipython3). This makes intuitive sense. Initially the disease spreads rapidly through a susceptible population. Transmission slows and eventually stops as more people get infected.

In the SIR model, this S-shaped curve is, to a good approximation, the logistic function. At early times, it's almost identical to the exponential function. That's a nice sanity check, because data and reasoning tell us that cases should grow multiplicatively in a naive population. https://en.wikipedia.org/wiki/Logistic_function

This function has three parameters. One is the initial exponential growth rate. Another is the time of the middle of the S, which coincides roughly with the peak in active infections. And another is the upper asymptote, or final total infections. All are useful.
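
To make the three parameters concrete, here is a minimal sketch. The names `r`, `t_mid` and `K` and the numbers are illustrative assumptions, not values fitted to any outbreak. It compares the logistic curve with the exponential it approaches well before the midpoint.

```python
import math

def logistic(t, r, t_mid, K):
    """Cumulative infections: growth rate r, midpoint time t_mid, final total K."""
    return K / (1.0 + math.exp(-r * (t - t_mid)))

def early_exponential(t, r, t_mid, K):
    """The exponential that the logistic approaches when t is far below t_mid."""
    return K * math.exp(r * (t - t_mid))

# Illustrative values only (assumed, not fitted to any data).
r, t_mid, K = 0.2, 60.0, 1_000_000.0

for t in (0, 10, 20, 30, 60):
    ratio = logistic(t, r, t_mid, K) / early_exponential(t, r, t_mid, K)
    print(f"day {t:2d}: logistic / exponential = {ratio:.4f}")
```

The ratio stays close to 1 while `t` is far below `t_mid` and falls to 0.5 at the midpoint, which is where the two curves part ways.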

We can estimate parameter values in two ways. Either by fitting them to data on numbers of infections. Or, if we have a mechanistic model which relates parameters to biological observables, we can measure those instead. Let's focus on the first option.

Here's the problem. Early in an outbreak, we have data from the exponential growth phase only. If we fit a full model to such data, we have huge uncertainties in the middle and maximum of the S. That's common sense: it's hard to fit parts of a curve that have no data.
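
A rough way to see this, as a sketch with made-up numbers rather than a real fit: take two logistic curves that start from the same case count with the same growth rate, but whose final totals differ by a factor of 100, and compare them over the first 20 days. The function name `logistic_from_start` and all values are assumptions for illustration.

```python
import math

def logistic_from_start(t, n0, r, K):
    """Logistic curve that starts at n0 cases on day 0 and saturates at K."""
    return K / (1.0 + (K / n0 - 1.0) * math.exp(-r * t))

n0, r = 10.0, 0.2            # hypothetical starting count and growth rate
K_small, K_large = 1e5, 1e7  # two candidate final totals, 100x apart

# Largest relative gap between the two curves over the early data window.
max_rel_gap = max(
    abs(logistic_from_start(t, n0, r, K_large) - logistic_from_start(t, n0, r, K_small))
    / logistic_from_start(t, n0, r, K_large)
    for t in range(0, 21)
)
print(f"largest relative gap over the first 20 days: {max_rel_gap:.2%}")
```

With these assumed values the gap stays below one percent, far smaller than the noise in real case counts, so early data alone cannot tell the two final totals apart.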

Parameter estimates for the later parts of the curve are determined by noise. In other words, they are unstable. As @DaviFaranda has shown, predictions of final total infections can fluctuate by orders of magnitude as each data point arrives. https://twitter.com/DaviFaranda/status/1239937776734306304

Here is the (very) recent paper by @DaviFaranda, @isaacpc1975, @hulme_oliver, Aglaé Jezequel, Jeroen Lamb, Yuzuru Sato, @H4wkm0th. https://twitter.com/DaviFaranda/status/1242839845250228236

Unless we have good ways to estimate these parameters from other measurements, we just have to accept that we cannot know them with much certainty at the start of an outbreak. That's annoying, because this is exactly the moment when our decisions have most impact.

So what can we know early on? Answer: the exponential growth rate or, equivalently, the doubling time. We can estimate this parameter reliably, because all our data comes from the period where model behaviour is governed by it.

The doubling time is extremely useful. Assuming our health service is not already designed for a pandemic, it will tell us pretty accurately when our hospitals will be overwhelmed and, therefore, how much time we have to act.
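
As a back-of-the-envelope sketch of that calculation, assuming pure exponential growth and entirely hypothetical numbers for current cases and hospital capacity:

```python
import math

def doubling_time(r):
    """Doubling time implied by an exponential growth rate r (per day)."""
    return math.log(2.0) / r

def days_until(current, threshold, r):
    """Days for a count growing at rate r to rise from current to threshold."""
    return math.log(threshold / current) / r

# Hypothetical inputs: cases double every 3 days, 100 patients now,
# and capacity for 1600 patients (four doublings away).
r = math.log(2.0) / 3.0
print(f"doubling time: {doubling_time(r):.1f} days")
print(f"days until capacity: {days_until(100, 1600, r):.1f}")
```

Four doublings at three days each gives twelve days, which is the kind of number a decision-maker needs early on.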

But we can get the doubling time by fitting a simple exponential function to cumulative cases. It's as easy as finding the slope of a straight line on a log-linear plot. So why do we need the full model early on? That's a good question. https://twitter.com/ole_b_peters/status/1242065374839017474
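
Here is one way that straight-line fit might look, as a sketch using ordinary least squares on the logarithm of the counts. The function name `fit_exponential` is made up for this example, and the case counts are synthetic, generated to double every 3 days.

```python
import math

def fit_exponential(days, cases):
    """Least-squares line through (day, ln cases); returns (rate, initial cases)."""
    logs = [math.log(c) for c in cases]
    n = len(days)
    mean_t = sum(days) / n
    mean_y = sum(logs) / n
    sxx = sum((t - mean_t) ** 2 for t in days)
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in zip(days, logs))
    slope = sxy / sxx                    # growth rate per day
    intercept = mean_y - slope * mean_t  # ln of the fitted day-0 count
    return slope, math.exp(intercept)

# Synthetic cumulative counts (not real data): start at 50, double every 3 days.
days = list(range(10))
cases = [50 * 2 ** (t / 3) for t in days]

rate, n0 = fit_exponential(days, cases)
doubling = math.log(2) / rate
print(f"growth rate {rate:.3f} per day, doubling time {doubling:.2f} days")
```

On noise-free synthetic data the fit recovers the 3-day doubling time. Real counts are noisy, so the estimate would come with an uncertainty that this sketch does not compute.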

Of course, a simple exponential fit will fail eventually, e.g. when it predicts 43 trillion cases by October. But we hope to have enough data from all parts of the S to calibrate a full model by then.

A full model may be good at retrospectively explaining the phases of an epidemic, when all the data are in. And it may be good at predicting peak and final total infections, once a significant fraction of the population has been infected.

But, when fitting to case data, the S-shaped model tells us no more than the exponential model in the early phase of an epidemic, precisely because the two models are indistinguishable at early times. The later parts of the S are pure guesswork.

Indeed, using a full model at the start of an outbreak may be harmful. Parts of the prediction are hugely uncertain. Complexity limits intuition and can stop us sanity-checking. It's easy to trust a big black box that worked well when we ran it on the Spanish Flu.

Remember, early on is when we have the greatest power to alter the course of events. At the very least, we should be running simple and robust models, like exponential growth, in parallel with the fancy stuff. Think of it as insurance.

When all of this is over, there's a legitimate discussion to be had about how and when standard epidemiological models are useful in real-time evolving outbreaks. Let's not forget to have that discussion.

But for now let's get better at collecting data, so that when the state-of-the-art models come into their own later in the pandemic, we have what we need to calibrate them reliably and make useful predictions. And let's all #StayAtHome.
