Got a lot of angry replies to this one so here's a more in-depth thread about why the Behavioural Insights Team irritates me so much... https://twitter.com/sophie_e_hill/status/1238400198155649029

e.g. their "menu for change" project, which they say is based on "the latest and most well-evidenced behavioural science", cites Brian Wansink 6 times!! https://www.bi.team/publications/a-menu-for-change/

Brian Wansink is a fraud. He literally fabricates data.

And this has been widely reported on since 2017!

https://www.chronicle.com/article/Spoiled-Science/239529

https://slate.com/technology/2017/02/stop-getting-diet-advice-from-the-news.html

It is deeply irresponsible for the Nudge Unit to continue to promote fraudulent research.

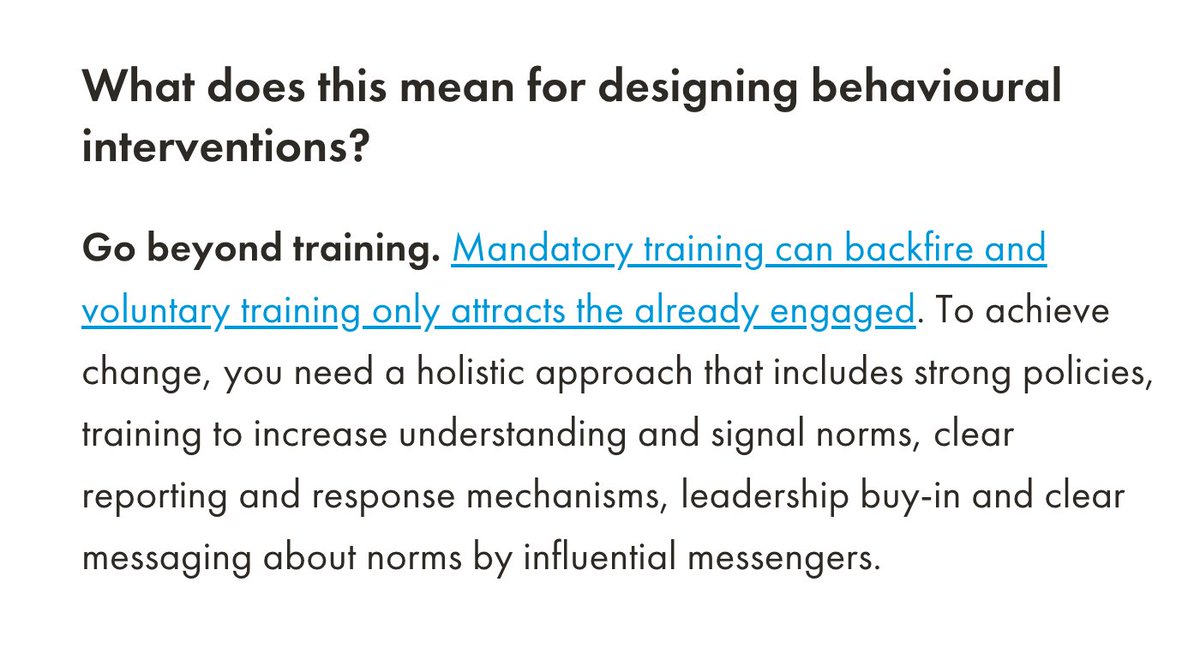

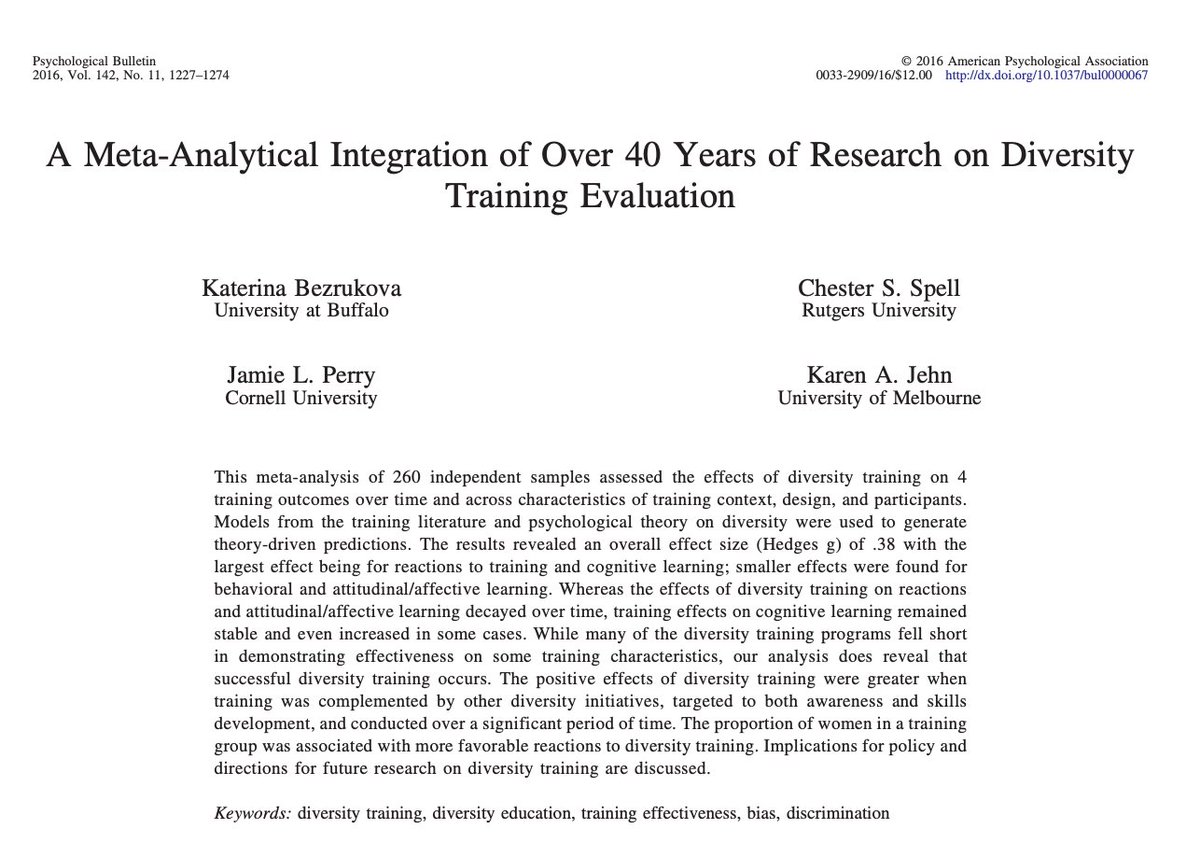

Sometimes when the Nudge Unit cites credible academic research they... don't seem to have actually read it?

Here's a blog post where they claim that mandatory sexual harassment training can backfire, with a link to this meta-analysis:

https://www.bi.team/blogs/more-than-a-few-bad-apples-what-behavioural-science-tells-us-about-reducing-sexual-harassment/

So, unlike the Nudge Unit, I actually read this paper.

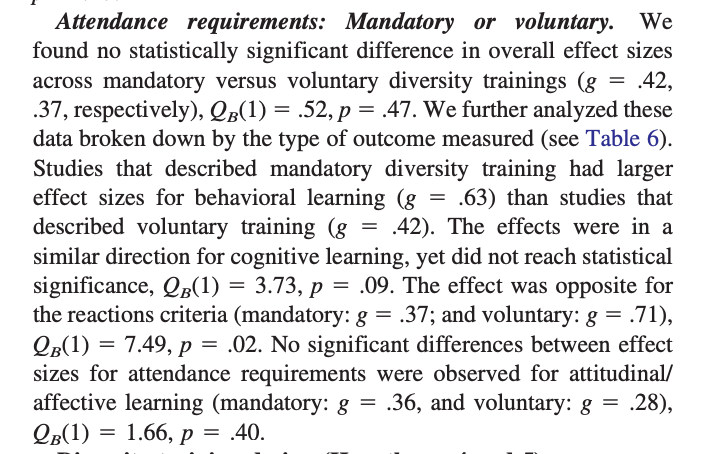

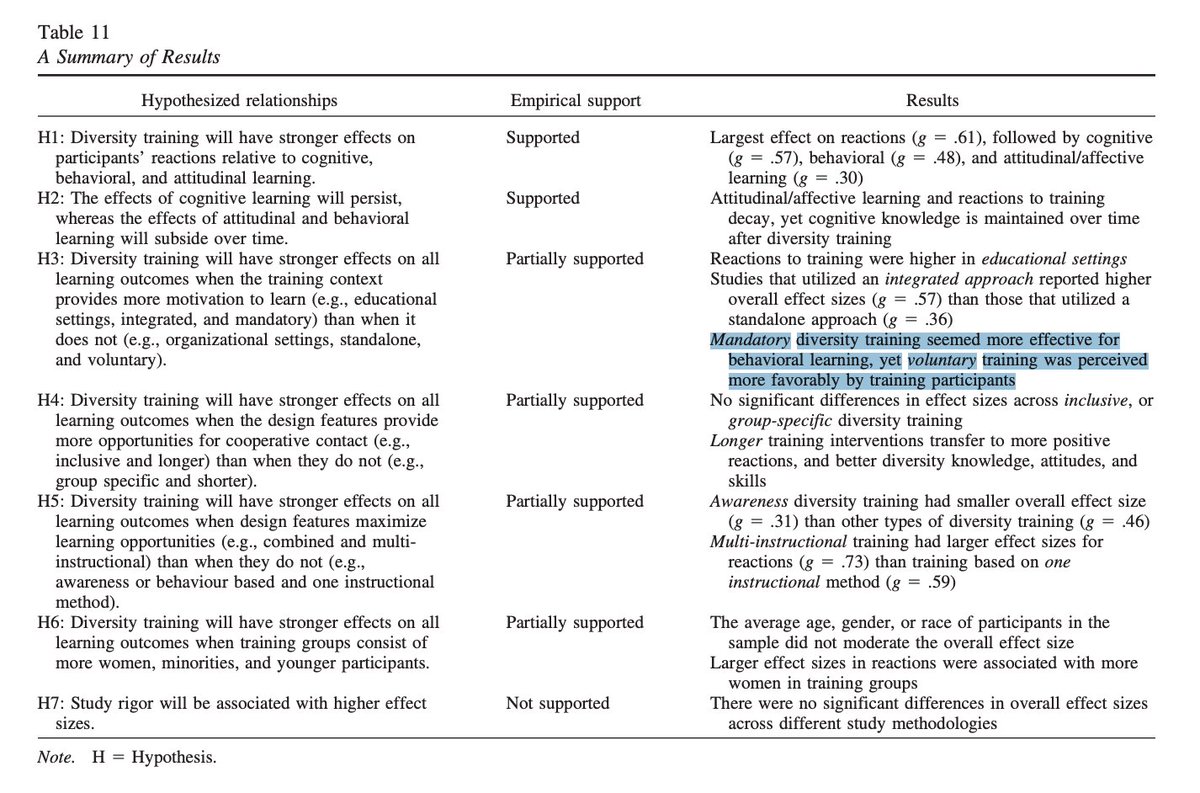

It finds:

- mandatory trainings are more effective at changing behaviour

- mandatory trainings produce positive reactions, though voluntary trainings produce even MORE positive reactions

That's not the same as a backlash!!?

P.S. Of course, there is some research out there that argues for the "backlash" effect, though it was not cited by the Nudge Unit here and I think it's deeply flawed for other reasons! https://twitter.com/sophie_e_hill/status/1201610408362156032?s=20

For example, here's a recent paper on their interventions in how the UK jobseeker's benefit is administered.

David Halpern has frequently cited this work as the work he is most proud of.

https://www.cambridge.org/core/journals/journal-of-public-policy/article/behavioural-insight-and-the-labour-market-evidence-from-a-pilot-study-and-a-large-steppedwedge-controlled-trial/F953A370AD7D8A0427FF0772098D7C74

The goal of this intervention is to increase "off-flow". Basically to try to get people to stop claiming jobseekers benefit as quickly as possible.

(Note: they are not measuring transitions into work, just when people stop claiming the benefit...)

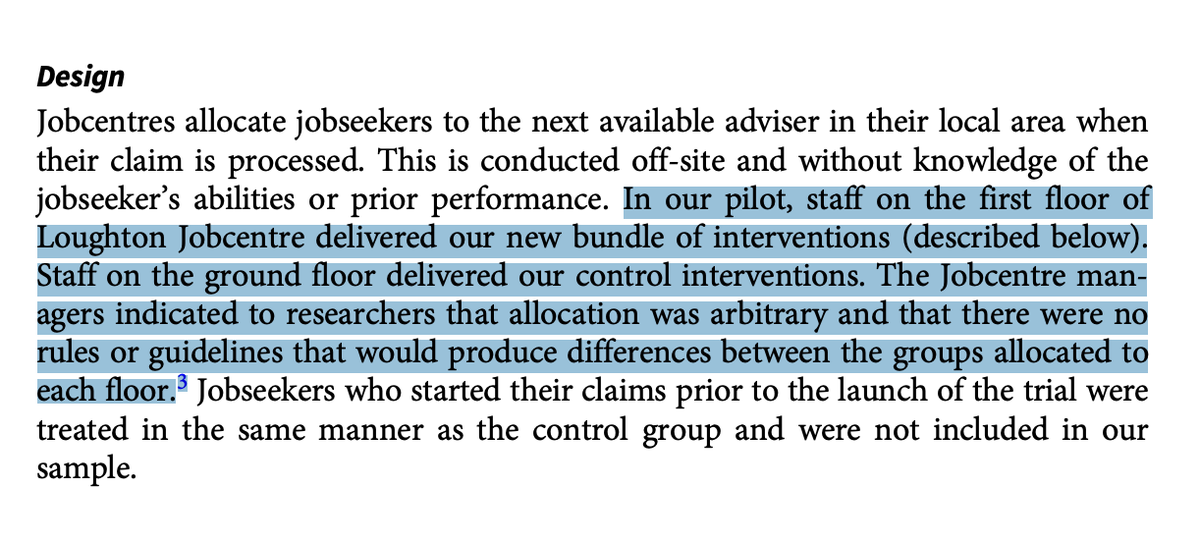

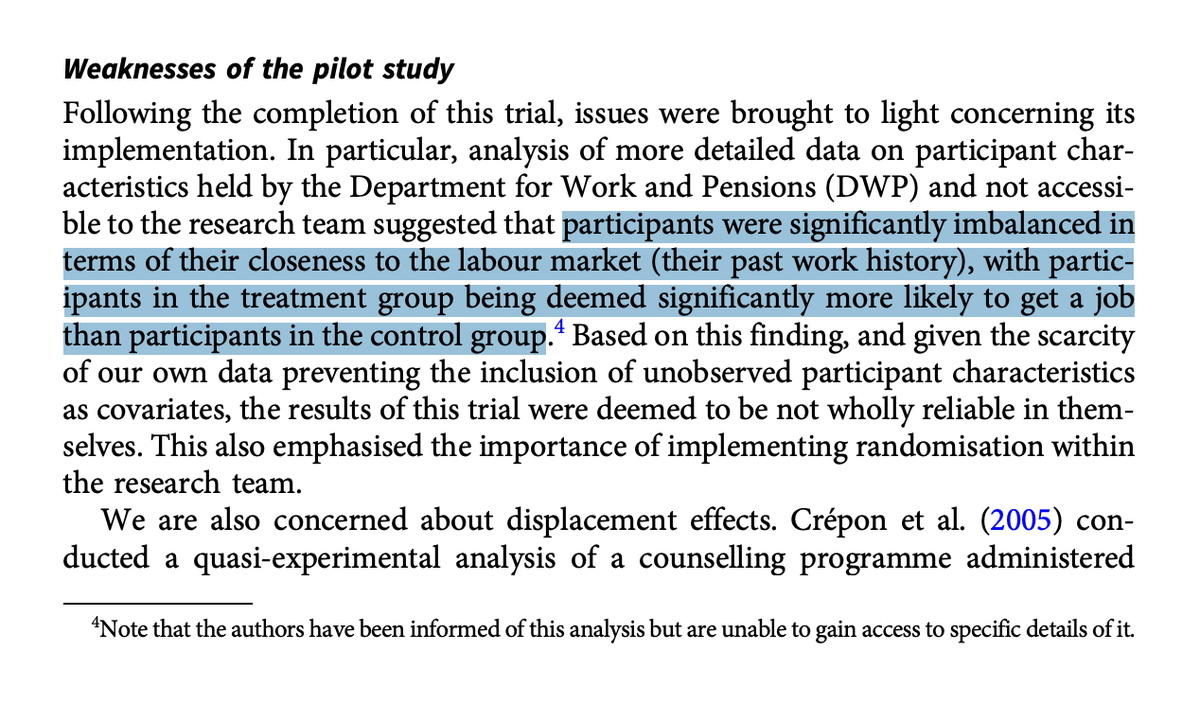

The first "experiment" was a pilot study in Loughton Jobcentre.

Treatment assignment was not randomized. It was based on whether a claimant was allocated to an adviser on the first floor or ground floor of the Jobcentre.

But don't worry, the allocation was arbitrary.

They also find a pretty big treatment effect on the, uh, control group? Once again raising the concern that the "intervention" here was basically a ton of political pressure to get people off benefits, rather than a new way of helping people look for work.

Never mind, that was just a pilot. Let's move on to the MAIN experiment. I'm sure this one will be a lot more rigorous.

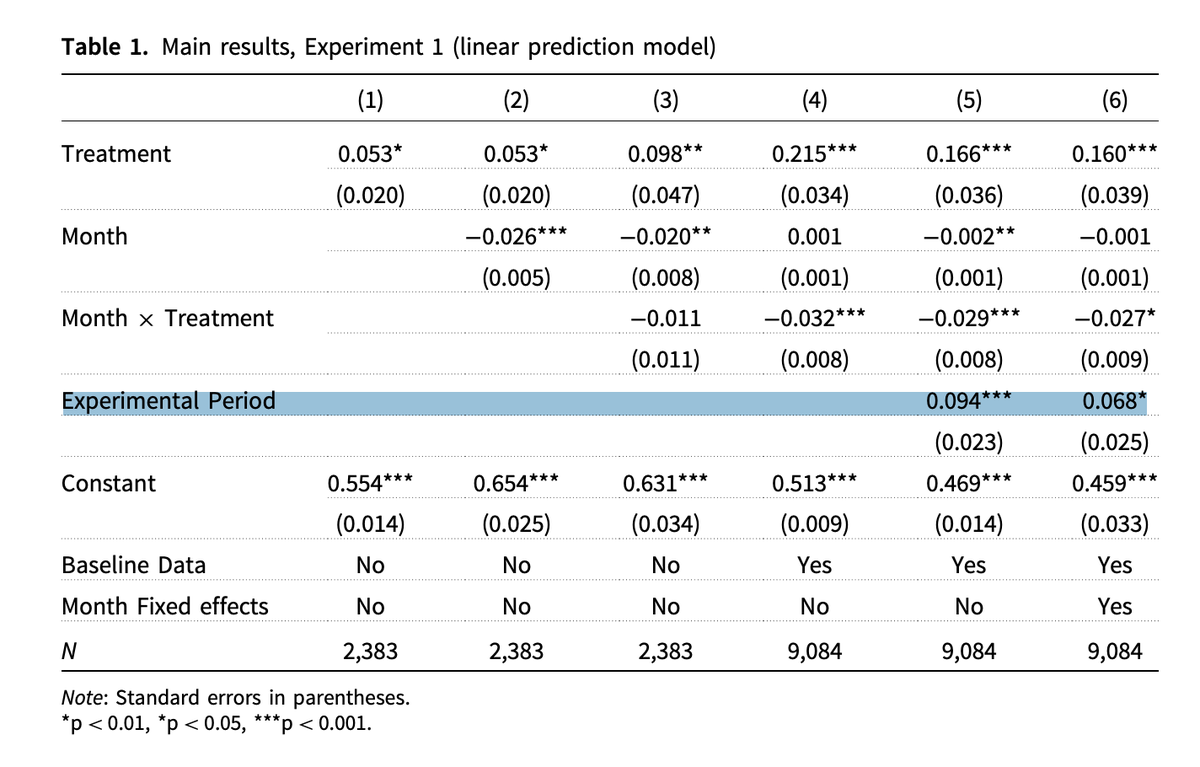

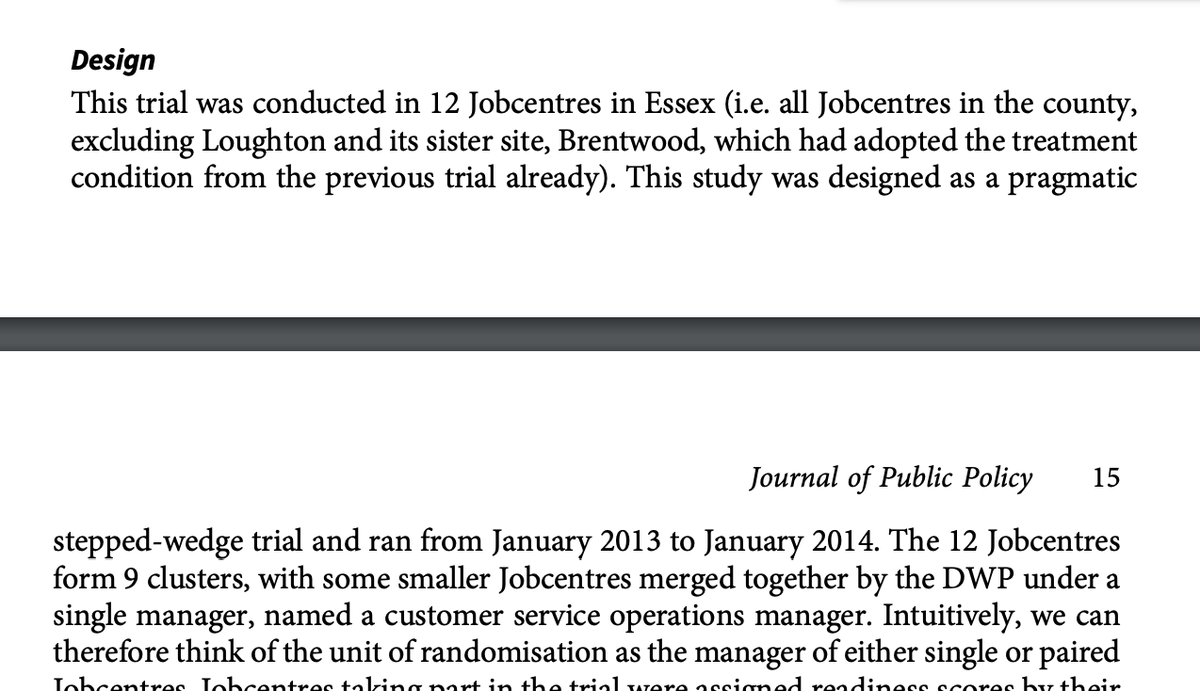

So to summarise, we have 9 clusters of Jobcentres. They have been put into 3 strata based on a subjective assessment of "readiness". The most "ready" clusters will receive the intervention first. But they're going to randomise the order within strata.
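To make the assignment scheme concrete, here is a rough sketch of what "randomise the order within strata" amounts to. The cluster labels, the three-per-stratum split and the `rollout_order` helper are all my own placeholders, not anything taken from the paper.

```python
import random


def rollout_order(strata, seed=None):
    """Return a roll-out order: strata are taken in the order given
    (most "ready" first) and clusters are shuffled only within a stratum."""
    rng = random.Random(seed)
    order = []
    for stratum in strata:
        shuffled = list(stratum)  # copy so the input is left untouched
        rng.shuffle(shuffled)     # the only random step in the design
        order.extend(shuffled)
    return order


# Hypothetical labels: 9 clusters, assumed to be split 3/3/3 by "readiness".
strata = [
    ["A1", "A2", "A3"],  # judged most ready
    ["B1", "B2", "B3"],
    ["C1", "C2", "C3"],  # judged least ready
]

if __name__ == "__main__":
    print(rollout_order(strata, seed=1))
```

Whatever the seed, the first three slots always go to the "most ready" stratum, so roll-out timing is only random within a stratum, never between strata.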

So there are two basic issues:

1. In any stepped wedge design, it's hard to disentangle the effect of treatment from a secular time trend. But it's even worse here because the roll-out explicitly conditions on Jobcentre readiness, which is plausibly related to the outcome.

2. Number of clusters is way too small, we're talking like 3 in each stratum? It is well known that clustered standard errors behave badly when the number of clusters is small.

e.g. https://declaredesign.org/blog/2018-10-16-few-clusters.html
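A quick way to see the few-clusters problem is to simulate it. The sketch below is not the paper's analysis. It is a toy setup of my own (9 equal-sized clusters, treatment assigned at cluster level, a true effect of exactly zero) that counts how often the usual cluster-robust (CR0) standard errors with a 1.96 cutoff declare a "significant" effect. All function names and parameter values are assumptions made for illustration.

```python
import numpy as np


def cluster_robust_se(X, y, cluster_ids):
    """OLS coefficients with CR0 (Liang-Zeger) cluster-robust standard errors."""
    XtX_inv = np.linalg.inv(X.T @ X)
    beta = XtX_inv @ X.T @ y
    resid = y - X @ beta
    k = X.shape[1]
    meat = np.zeros((k, k))
    for g in np.unique(cluster_ids):
        mask = cluster_ids == g
        score = X[mask].T @ resid[mask]  # sum of x_i * u_i within cluster g
        meat += np.outer(score, score)
    cov = XtX_inv @ meat @ XtX_inv       # sandwich estimator
    return beta, np.sqrt(np.diag(cov))


def false_positive_rate(n_clusters=9, n_per_cluster=50, n_sims=2000, seed=0):
    """Share of simulations rejecting a true null at the nominal 5% level."""
    rng = np.random.default_rng(seed)
    cluster_ids = np.repeat(np.arange(n_clusters), n_per_cluster)
    n_treated = n_clusters // 2
    rejections = 0
    for _ in range(n_sims):
        treated = rng.choice(n_clusters, size=n_treated, replace=False)
        treat = np.isin(cluster_ids, treated).astype(float)
        cluster_shock = rng.normal(size=n_clusters)[cluster_ids]
        y = cluster_shock + rng.normal(size=cluster_ids.size)  # no treatment effect
        X = np.column_stack([np.ones_like(treat), treat])
        beta, se = cluster_robust_se(X, y, cluster_ids)
        if abs(beta[1] / se[1]) > 1.96:
            rejections += 1
    return rejections / n_sims


if __name__ == "__main__":
    print("9 clusters: ", false_positive_rate(n_clusters=9))
    print("60 clusters:", false_positive_rate(n_clusters=60, n_sims=500))
```

If the standard errors were well calibrated, the first number would sit near 0.05. With only 9 clusters it should come out noticeably higher, and it moves back towards 0.05 as the number of clusters grows.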

There's almost zero discussion of these very very obvious concerns in the paper. They don't even include strata fixed effects in the main results, you have to go to the appendix for that. No reference to, e.g. the wild cluster bootstrap.
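For anyone who hasn't met it, here is a minimal sketch of a wild cluster bootstrap, assuming the common variant with Rademacher (±1) weights drawn per cluster and the null hypothesis imposed when generating bootstrap samples. The helper names (`cluster_t_stat`, `wild_cluster_bootstrap_p`) are mine. This is an illustration of the idea, not a replacement for a tested package and not something the paper ran.

```python
import numpy as np


def cluster_t_stat(X, y, cluster_ids, coef_index):
    """t-statistic for one OLS coefficient using CR0 cluster-robust errors."""
    XtX_inv = np.linalg.inv(X.T @ X)
    beta = XtX_inv @ X.T @ y
    resid = y - X @ beta
    k = X.shape[1]
    meat = np.zeros((k, k))
    for g in np.unique(cluster_ids):
        mask = cluster_ids == g
        score = X[mask].T @ resid[mask]
        meat += np.outer(score, score)
    cov = XtX_inv @ meat @ XtX_inv
    return beta[coef_index] / np.sqrt(cov[coef_index, coef_index])


def wild_cluster_bootstrap_p(X, y, cluster_ids, coef_index, n_boot=999, seed=0):
    """Two-sided bootstrap p-value for H0: coefficient == 0."""
    rng = np.random.default_rng(seed)
    t_obs = cluster_t_stat(X, y, cluster_ids, coef_index)

    # Restricted fit: drop the tested column, which imposes the null.
    X_r = np.delete(X, coef_index, axis=1)
    beta_r = np.linalg.lstsq(X_r, y, rcond=None)[0]
    fitted_r = X_r @ beta_r
    resid_r = y - fitted_r

    clusters, cluster_pos = np.unique(cluster_ids, return_inverse=True)
    t_boot = np.empty(n_boot)
    for b in range(n_boot):
        # One random sign per cluster, shared by every observation in it.
        signs = rng.choice([-1.0, 1.0], size=clusters.size)
        y_star = fitted_r + signs[cluster_pos] * resid_r
        t_boot[b] = cluster_t_stat(X, y_star, cluster_ids, coef_index)

    return float(np.mean(np.abs(t_boot) >= abs(t_obs)))


if __name__ == "__main__":
    # Made-up data: 9 clusters of 40, 4 treated clusters, no true effect.
    rng = np.random.default_rng(1)
    ids = np.repeat(np.arange(9), 40)
    treat = (ids < 4).astype(float)
    X = np.column_stack([np.ones_like(treat), treat])
    y = rng.normal(size=9)[ids] + rng.normal(size=ids.size)
    print(wild_cluster_bootstrap_p(X, y, ids, coef_index=1))
```

The point of flipping signs cluster by cluster is that the reference distribution for the t-statistic is built from the data's own within-cluster dependence, rather than leaning on a large-number-of-clusters approximation that doesn't hold with 9 clusters.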

Reviewer #2, where u at?

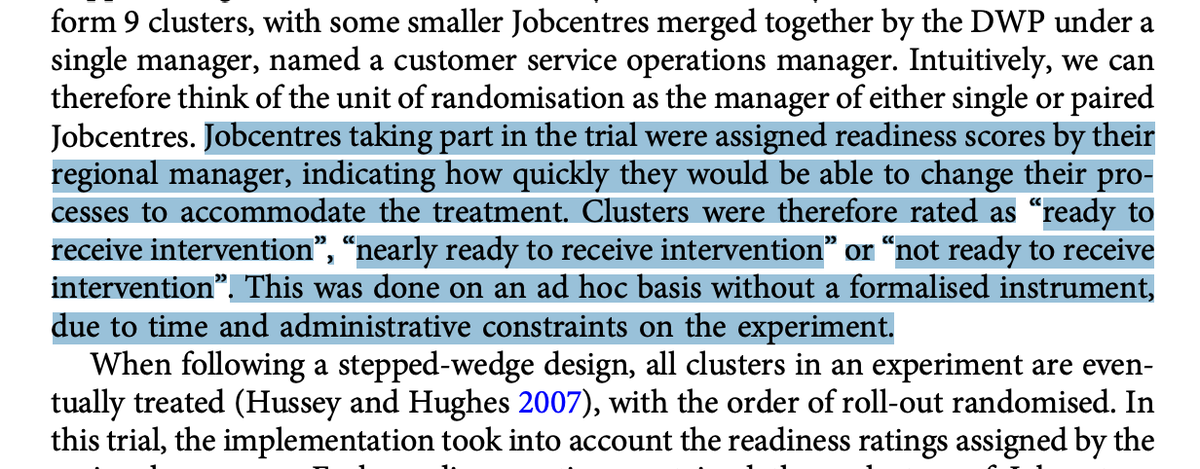

Here's their takeaway:

"it is not possible to be entirely unequivocal about these results"

but also

"we are therefore able to be unequivocal in arguing that its national rollout is justified and backed by evidence"

????

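It's not just problems with the experimental design. Studies published by the Nudge Unit show alarmingly frequent issues with basic implementation.

Here's a study where they lost 75% (!) of their data lmao

actually it's more like 82% ?? they recruited 4,000 participants but were only left with 750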
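For the record, the arithmetic on those two round numbers (the exact counts in the study aren't given here, so treat this as a ballpark):

```python
def attrition_rate(recruited, retained):
    """Share of recruited participants missing from the final sample."""
    if recruited <= 0 or not 0 <= retained <= recruited:
        raise ValueError("need 0 <= retained <= recruited and recruited > 0")
    return 1 - retained / recruited


# Rounded figures quoted above: 4,000 recruited, 750 left.
print(f"{attrition_rate(4000, 750):.0%}")  # prints 81%
```

So with the rounded figures it comes to roughly 81%, in the same ballpark as the number above and comfortably worse than 75%.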

Amazingly, this is actually the study that David Halpern cited when he was asked on a podcast what his main contribution to academic thought has been.

(Skip to 16:00) https://podtail.com/en/podcast/social-science-bites/david-halpern-on-nudging/

Actual quote (this is Halpern talking about research on social capital):

"The academic literature ran out of road at that point. But we have been able to work on actual interventions."

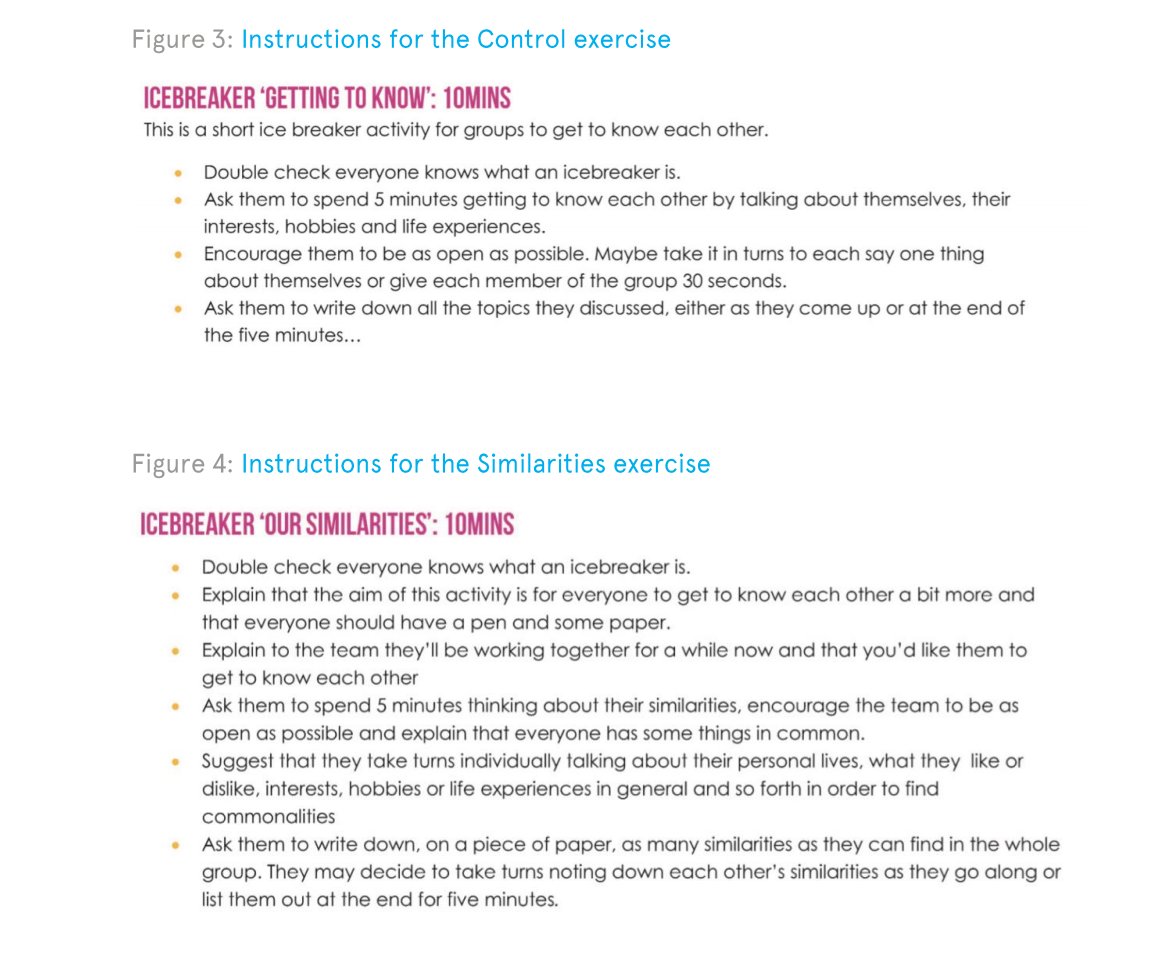

What is this intervention that the academic community has been wilfully ignoring?

Different types of 10 minute icebreakers.

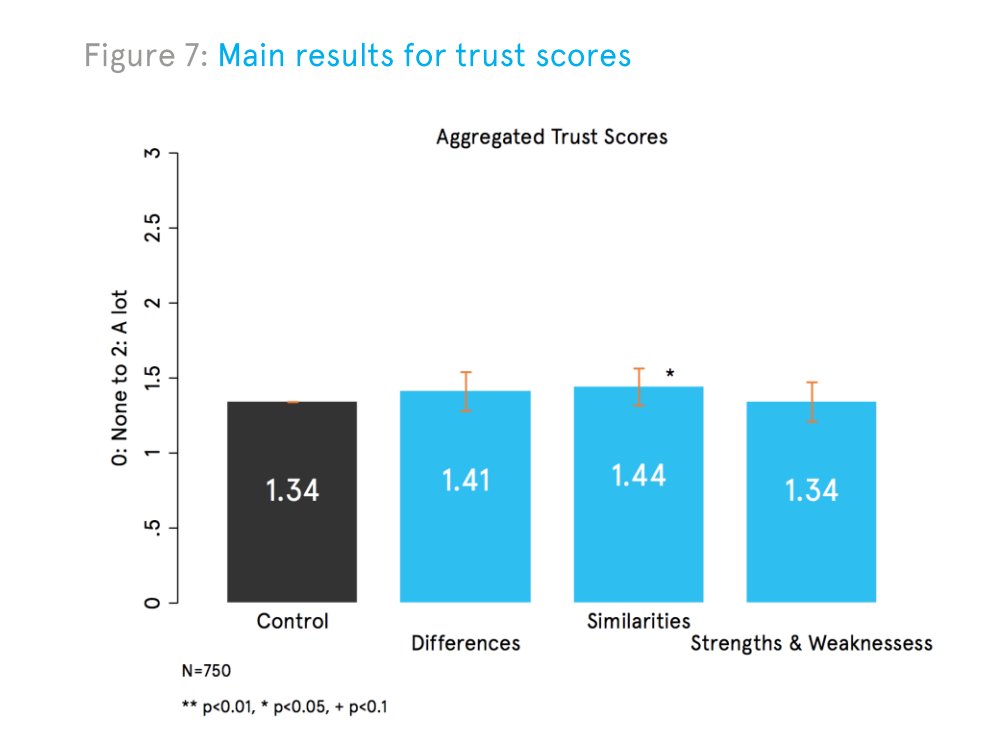

and, uh, here are the results 🧐

Don't worry though, I'm sure people like David Halpern and their spectacularly-sized egos aren't going to cause too much harm by, say, eroding public trust in science and ultimately causing the deaths of many people! Everything is fine! https://twitter.com/BBCMarkEaston/status/1237694665824047111?s=20
