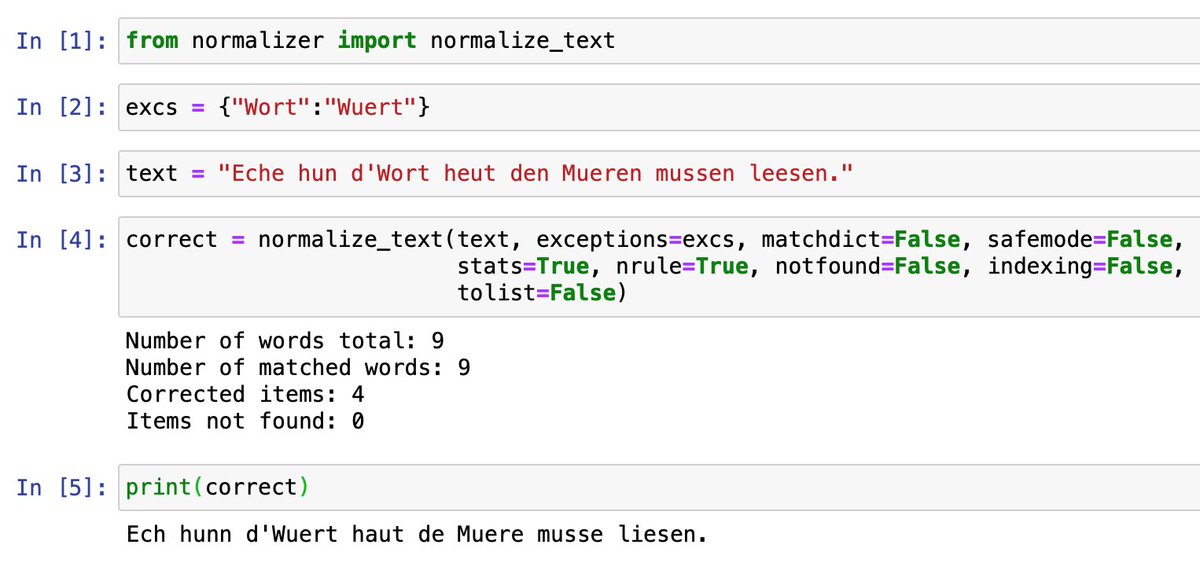

The fun of working with low-resource languages... First working prototype of an automatic orthographic correction tool for #Luxembourgish in #Python. It's still a bit resource-heavy and needs better correction dictionaries, but it's a start. #NLProc #LowResLang #Datascience

The resources build on data from @RTLlu and the fabulous spellchecker by @weimerskirch

Cracked one of the challenging aspects of Luxembourgish orthography today: the deletion of word-final -n, except before vowels and certain consonants, for phonetic reasons. That rule, plus tons of exceptions to it (e.g., for nouns and names), has to be handled after the initial word correction.
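To make the rule concrete, here is a minimal Python sketch of the deletion step only; it is not the spellux implementation. It assumes whitespace tokenization, assumes the commonly cited set of consonants that let the -n stay (h, n, d, t, z), treats a final -n or -nn as one deletable unit, keeps the -n when there is no following word or when punctuation follows, and takes a hypothetical `exceptions` set for the nouns and names mentioned above.

```python
VOWELS = set("aeiouäëéèöü")
# Assumption: the usual set of consonants before which word-final -n is kept.
KEEP_CONSONANTS = set("hndtz")


def apply_n_rule(text, exceptions=frozenset()):
    """Drop word-final -n/-nn unless the next word lets it stay (sketch).

    `exceptions` is a hypothetical set of lowercase words (e.g. names)
    that the rule must never touch.
    """
    tokens = text.split()
    out = []
    for i, tok in enumerate(tokens):
        nxt = tokens[i + 1] if i + 1 < len(tokens) else ""
        first = nxt[:1].lower()
        drop = (
            tok.lower().endswith("n")
            and tok.lower() not in exceptions
            and tok.rstrip("nN") != ""      # never delete a whole token
            and first.isalpha()             # no next word / punctuation: keep -n
            and first not in VOWELS
            and first not in KEEP_CONSONANTS
        )
        # rstrip removes a final -n or -nn in one go ("wann" -> "wa")
        out.append(tok.rstrip("nN") if drop else tok)
    return " ".join(out)
```

For example, `apply_n_rule("wann mer")` gives `"wa mer"`, while `"wann ech"` and `"wann dat"` stay unchanged. A full correction tool also has to restore an -n that was wrongly dropped, which this sketch does not attempt.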

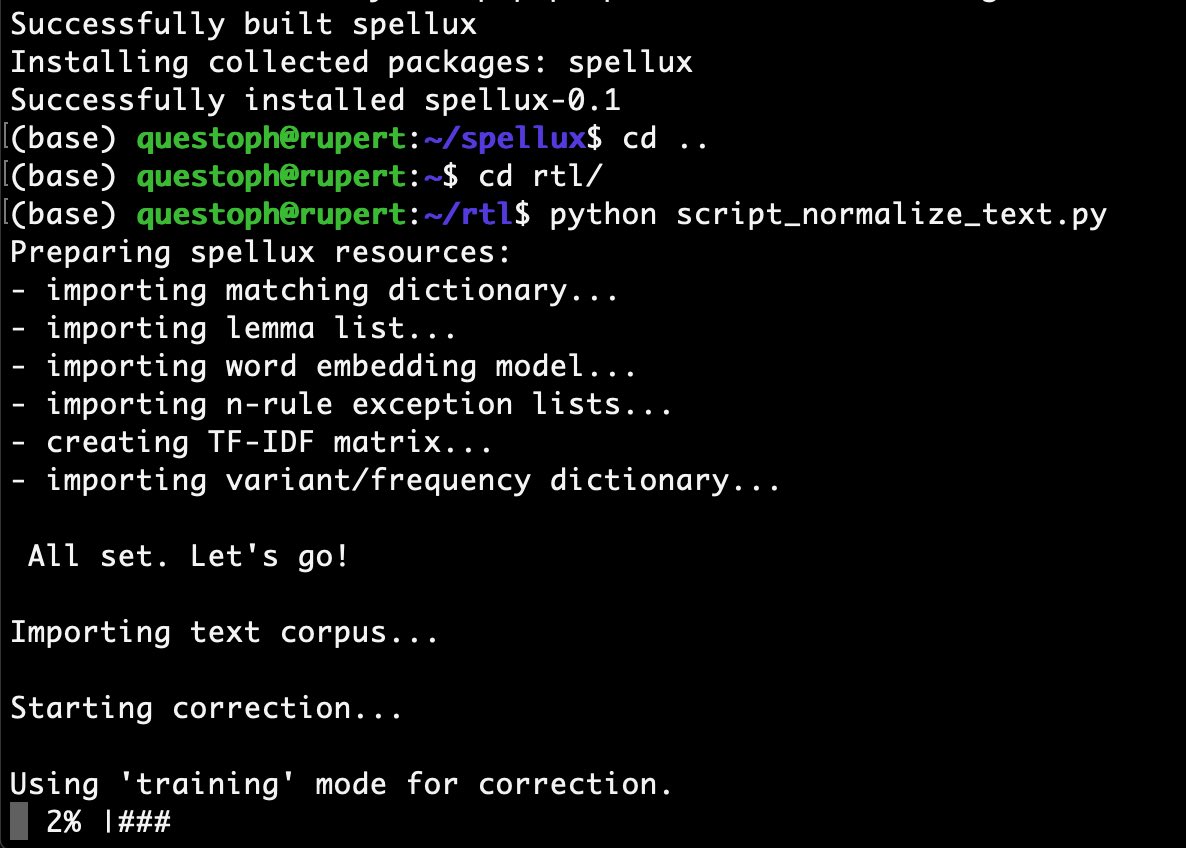

OK then, we are officially in training mode! Over the following days, I will experiment with some pretrained correction dictionaries and algorithms (tf-idf, Norvig's spelling corrector, word embeddings, logged correction data). Plus, some remaining bugs in the n-rule need to be fixed.
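For readers unfamiliar with the Norvig approach, a minimal sketch of its core idea: generate every string one or two edits away from a word and pick the most frequent one found in a frequency dictionary. The alphabet and the `counts` dictionary here are assumptions for illustration (in practice `counts` would come from a corpus); this is not spellux code.

```python
from collections import Counter

# Assumed alphabet: ASCII letters plus common Luxembourgish diacritics.
LETTERS = "abcdefghijklmnopqrstuvwxyzäëéèöü"


def edits1(word):
    """All strings one delete, transpose, replace, or insert away from word."""
    splits = [(word[:i], word[i:]) for i in range(len(word) + 1)]
    deletes = [left + right[1:] for left, right in splits if right]
    transposes = [left + right[1] + right[0] + right[2:]
                  for left, right in splits if len(right) > 1]
    replaces = [left + c + right[1:] for left, right in splits if right for c in LETTERS]
    inserts = [left + c + right for left, right in splits for c in LETTERS]
    return set(deletes + transposes + replaces + inserts)


def edits2(word):
    """All strings two edits away from word, generated lazily."""
    return (e2 for e1 in edits1(word) for e2 in edits1(e1))


def known(words, counts):
    """Keep only candidates that appear in the frequency dictionary."""
    return {w for w in words if w in counts}


def correct(word, counts):
    """Prefer the word itself, then 1-edit, then 2-edit candidates; pick the most frequent."""
    candidates = (known([word], counts)
                  or known(edits1(word), counts)
                  or known(edits2(word), counts)
                  or {word})
    return max(candidates, key=lambda w: counts.get(w, 0))


# Hypothetical toy frequency dictionary
counts = Counter("dat ass eng gutt iddi dat ass".split())
```

With this toy dictionary, `correct("gut", counts)` returns `"gutt"`. The quality of the result depends almost entirely on the frequency dictionary, which is why better correction dictionaries matter so much here.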

You know there's something going on between you and Luxembourgish orthography when you are working in a Jupyter notebook called “deidiotify_n-rule”... Any suggestions, @den_ZLS? 😛


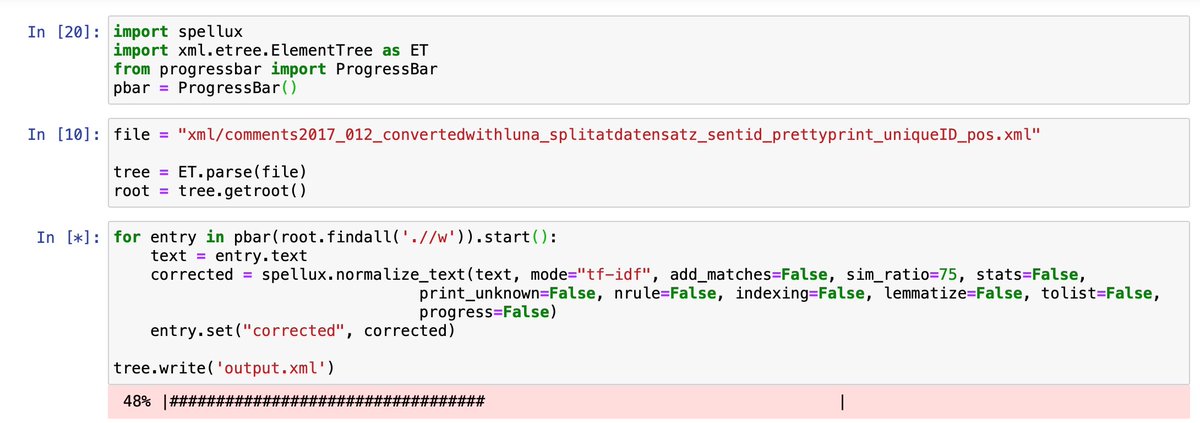

Another brick in the wall: upon request from the @ImpressoProject, I’ve been experimenting with a function to lemmatize texts after normalization. Pretty basic still (no POS filter or suffix ruleset), but it works smoothly for the most common words in Luxembourgish.
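A lemmatizer without a POS filter or suffix rules essentially boils down to a lookup from word form to lemma. The sketch below illustrates that idea; the tiny `LEMMAS` table is a hypothetical toy example, not the resource spellux actually uses.

```python
import re

# Hypothetical toy lookup table (word form -> lemma); not spellux's data.
LEMMAS = {
    "ass": "sinn", "bass": "sinn",    # forms of "sinn" (to be)
    "hues": "hunn", "huet": "hunn",   # forms of "hunn" (to have)
    "Kanner": "Kand",                 # plural noun -> singular
}


def lemmatize(text, table=LEMMAS):
    """Map each token to its lemma via table lookup; unknown tokens pass through."""
    tokens = re.findall(r"\w+", text)  # \w matches Unicode letters such as ë
    # Try the exact form first (keeps capitalized nouns), then the lowercased form.
    return [table.get(tok, table.get(tok.lower(), tok)) for tok in tokens]
```

For instance, `lemmatize("Hien huet Kanner")` yields `["Hien", "hunn", "Kand"]`. Running it after normalization matters because a lookup only succeeds when the word form is spelled the way the table expects.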

Next up: testing the #spellux package for word correction in XML files containing user comments from @RTLlu. This will be the first real-life use of the package. The goal is to reduce orthographic variability in the dataset for training embedding models and our sentiment engine.
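A rough sketch of what applying word correction to comments stored in XML could look like, using only the standard library. The `<comment>` tag name and the `correct_word` callable are assumptions for illustration: the real file structure isn't described here, and this does not reproduce the spellux API.

```python
import xml.etree.ElementTree as ET


def normalize_comments(xml_string, correct_word, tag="comment"):
    """Apply a per-word correction function to the text of every <tag> element.

    `tag` and `correct_word` are hypothetical; plug in whatever element name
    and correction function the actual data and tool use.
    """
    root = ET.fromstring(xml_string)
    for elem in root.iter(tag):
        if elem.text:
            # Whitespace tokenization: simple, but it collapses spacing and
            # leaves punctuation attached to words.
            elem.text = " ".join(correct_word(w) for w in elem.text.split())
    return ET.tostring(root, encoding="unicode")
```

Keeping the correction function as a parameter separates the XML handling from the normalization logic, so the same loop works with a dictionary lookup, a Norvig-style corrector, or a full normalization package.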

Aaand we have lift-off: the first development build of the #spellux #Python package for automatic text normalization is now online. It's still resource-heavy and error rates are too high, but it is a start. Over the next months, we will test, expand, and evaluate. https://github.com/questoph/spellux

I’ve been pushing smaller fixes and improvements to the spellux repository on GitHub. Package now works properly on Windows and captures more cases of old spelling variants. #Learningbydoing #Codingneverstops

While home office might not be good for motivation and morale, it certainly brings coding and bugfixing up to speed. As of today, version 0.1.2 is out, with performance improvements for the lemmatizer and a new output parameter that adds JSON output. #quarantinecoding

Putting the #spellux package to good use: the development of the average error rate across 540k @RTLlu user comments (2008–2018). This is standardization in practice!

#NLProc #LowResLang #Luxembourg


Study with @PeterGilles to follow soon. To be fair, the package still produces some false positive and false negative corrections. But the trend is pretty stable even if we allow for a margin of error of 5% in the model.

Before we all get too excited here, these are the error rates normalized against the average text length. Things are still moving, but a bit slower than you had hoped, @RTLlu & @den_ZLS. So your intuition is still intact, @clarissawam 😉
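One plausible reading of "normalized against the average text length", sketched in Python: per year, divide the average number of corrections per comment by the average comment length in tokens, which equals total corrections over total tokens. The `(year, n_corrections, n_tokens)` record format is an assumption; the exact formula behind the chart isn't stated here.

```python
from collections import defaultdict


def normalized_error_rates(records):
    """Corrections per token for each year.

    `records` is a hypothetical iterable of (year, n_corrections, n_tokens),
    one entry per comment. Mean corrections per comment divided by mean
    comment length reduces to total corrections / total tokens.
    """
    corrections = defaultdict(int)
    tokens = defaultdict(int)
    for year, n_corr, n_tok in records:
        corrections[year] += n_corr
        tokens[year] += n_tok
    return {year: corrections[year] / tokens[year]
            for year in sorted(tokens) if tokens[year]}
```

Normalizing this way keeps a trend from being driven by comments simply getting shorter or longer over the years, which a raw count of corrections per comment would not control for.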
