It is mind-blowing that roughly the same design for the Internet Protocol (IP) has taken the internet from nothing to ~15B connected devices and has survived for 40 years through the period of fastest technological change in history.

What lessons can we draw? A thread

The internet has succeeded to the degree that it has for many reasons, but the most important one is the economic flywheel that it created: more providers of bandwidth lead to more developers building applications, which leads to more users who (via those apps) demand more bandwidth.

The core enabler of this flywheel—sustained through a period during which our basic technology for moving bits from A to B has improved by 1,000,000x—has been the radical minimalism and generality of IP.

Let’s walk through how it worked.

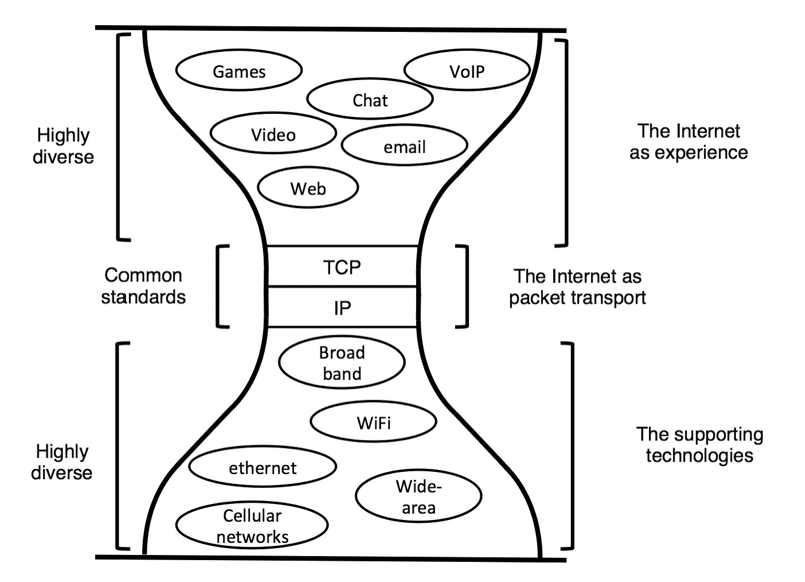

IP doesn't care about the kinds of applications that developers might build on top of it, nor does it care about the physical technologies (cable, fiber, radio, etc.) that people might use to implement it. As long as each node along the way speaks the protocol, everything still works.

This is the defining property of the internet. At the core, it is agnostic (or "unopinionated") about everything that exists below and above it. And, by virtue of that, it has the ability to act as an inter-network: a universal connector of networks.

IP has thus come to be known as the "Narrow Waist of the Internet". It is the single protocol through which all traffic flows. Its most obvious advantage is that it decouples the application layer from the hardware layer so that each may evolve independently.
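To make the decoupling concrete, here is a minimal sketch of a narrow waist in Python. Everything in it is hypothetical and for illustration only: `Packet` is far simpler than a real IP datagram, and `Fiber`, `Radio`, `chat_app`, and `sensor_app` are invented names. The point is only that applications and links agree on one small interface and on nothing else.

```python
from dataclasses import dataclass
from typing import Protocol


@dataclass(frozen=True)
class Packet:
    """The only thing both layers agree on (hypothetical, not a real IP datagram)."""
    src: str
    dst: str
    payload: bytes


class Link(Protocol):
    """Anything below the waist: it only has to move a Packet one hop."""
    def carry(self, packet: Packet) -> Packet: ...


class Fiber:
    def carry(self, packet: Packet) -> Packet:
        return packet  # stand-in for sending the bits over a fiber span


class Radio:
    def carry(self, packet: Packet) -> Packet:
        return packet  # stand-in for sending the bits over a radio hop


def send(path: list[Link], packet: Packet) -> Packet:
    """Forward a packet hop by hop; no hop inspects the payload."""
    for link in path:
        packet = link.carry(packet)
    return packet


# Two unrelated applications above the waist. Neither knows which links exist.
def chat_app(text: str) -> bytes:
    return text.encode("utf-8")


def sensor_app(reading: int) -> bytes:
    return reading.to_bytes(4, "big")


mixed_path = [Fiber(), Radio(), Fiber()]
chat = send(mixed_path, Packet("A", "B", chat_app("hello")))
sensor = send(mixed_path, Packet("A", "B", sensor_app(42)))
```

Adding a new link type or a new application touches only its own side of `Packet`, which is the sense in which the two layers can evolve independently.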

But, less obvious are the second order economic effects. Before IP, computer networks were fragmented. Each network had to be tailor-built to meet the needs of a specific application.

In contrast, IP acts as an aggregator. Through it, *any* networking technology installed by *any* service provider can support *any* application. By collapsing the entire computer networking world down to a single standard, the narrow waist does two things simultaneously:

(1) It allows the addressable market for the supply side—hardware vendors and service providers—to span the totality of all applications that might ever demand network connectivity.

And (2), it ensures that any request from the demand side (applications seeking to send bits from A to B) can be serviced by any provider using whatever technology it wants, as long as that technology is capable of moving bits from A to B.

In so doing, the narrow waist created the right conditions for the flywheel to get started. It bootstrapped a kind of marketplace for bandwidth with enough liquidity to make the economics make sense for both sides of the market.
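One rough way to see the aggregation effect is to count integrations. The sketch below is an illustrative model, not something from the historical record: it assumes that without a shared standard every application must be tailor-fit to every networking technology it wants to run on, while with a shared standard each side integrates once with the standard. The function names and the example numbers are made up.

```python
def bespoke_integrations(num_apps: int, num_technologies: int) -> int:
    """Assumed fragmented world: one tailor-built pairing per (app, technology)."""
    return num_apps * num_technologies


def waist_integrations(num_apps: int, num_technologies: int) -> int:
    """Assumed narrow-waist world: every app and every technology targets one standard."""
    return num_apps + num_technologies


# Hypothetical sizes, chosen only to show the gap.
apps, technologies = 1_000, 20
fragmented = bespoke_integrations(apps, technologies)
unified = waist_integrations(apps, technologies)
```

Under these assumptions the fragmented world needs 20,000 pairings and the unified one needs 1,020, and the gap widens as either side grows.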

But what are the implications of all of this for new protocols today?

Let’s talk about crypto.

Blockchains are computers. They are computers that are made up of a distributed network of physical machines that are owned and controlled by members of a broad community. This is what people mean when they say that they are decentralized—power over them is in the hands of many.

The defining property of these computers is that everyone can come to trust (and verify) that the programs that are deployed to them will run correctly, *as written*, no matter what. This is why these programs have come to be known as "smart contracts".

A better term for them, however, might be "sovereign programs" because, once deployed, they deterministically execute themselves, instruction by instruction, subject to nobody's authority.

They run with immunity from intervention by (1) the people who originally wrote them, (2) the people who interact with them while they are running, and (3) even the people who control the physical machines that execute them.
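The property that makes this verifiable is determinism: anyone who replays the same inputs through the same program must arrive at the same state. Here is a toy sketch of that idea in Python. It is not how any particular blockchain is implemented; `transfer`, `run`, and `state_digest` are hypothetical names, and the "program" is a deliberately trivial balance ledger.

```python
import hashlib
import json


def transfer(state: dict[str, int], sender: str, receiver: str, amount: int) -> dict[str, int]:
    """Pure transition function: invalid transfers leave the state unchanged."""
    if amount <= 0 or state.get(sender, 0) < amount:
        return dict(state)
    new_state = dict(state)
    new_state[sender] -= amount
    new_state[receiver] = new_state.get(receiver, 0) + amount
    return new_state


def run(initial: dict[str, int], transactions: list[tuple[str, str, int]]) -> dict[str, int]:
    """Replay an ordered list of transactions from a starting state."""
    state = dict(initial)
    for sender, receiver, amount in transactions:
        state = transfer(state, sender, receiver, amount)
    return state


def state_digest(state: dict[str, int]) -> str:
    """Canonical hash of the state, so independent machines can compare results."""
    encoded = json.dumps(state, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


genesis = {"alice": 10, "bob": 0}
txs = [("alice", "bob", 4), ("bob", "alice", 1), ("bob", "carol", 100)]

# Three independent "machines" replay the same history.
replica_states = [run(genesis, txs) for _ in range(3)]
digests = {state_digest(s) for s in replica_states}
```

Because `transfer` has no hidden inputs, all three replicas produce one digest, and a machine that reported anything else would be detectably wrong.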

It is because of their inviolable sovereignty and transparency that such programs can credibly act as trusted intermediaries between strangers. In fact, this is precisely why a single program—i.e. Bitcoin—has the power to come alive as a fully digital, global store of value.

Bitcoin, however, is just the beginning. Smart contracts are a fundamentally new computational building block that is very general. They unlock an uncharted realm of possible applications that we have barely begun to explore.

For instance, it is already clear from the DeFi world on Ethereum that smart contracts have started to unlock a parallel financial system that is less reliant on top-down institutional trust. Instead, it builds upon the bottom-up, game-theoretic guarantees of blockchain computers.

And, there are countless other applications, many of which have nothing to do with finance, that are already in the works. Those, however, will have to be the topic of some other unconscionably long Twitter thread (:

So, that’s what crypto is all about in like eight Tweets, but what does any of that have to do with the history of the internet?

The key insight is that a narrow waist is likely to emerge for blockchains as well. Let’s call it the “Narrow Waist of Blockchain Computing.”

The natural place in the stack for it to emerge is the game theoretic structure at the heart of every blockchain that unifies its community into a single, self-policing network—its consensus mechanism.

Most blockchains today have consensus mechanisms that are highly opinionated. Their inner workings make prescriptive assumptions about how every other layer in the stack should work.

This kind of tight coupling limits the kinds of applications that can be built on top of them. Any computer that preordains the use of a specific programming paradigm or language, for example, unavoidably cuts down the design space of what people can build for it.

Just as importantly, coupling consensus with the rest of the stack also limits a network’s ability to evolve and incorporate new technologies as they emerge. For example, today we tend to use game-theoretic tools to verify compute; tomorrow, cryptographic approaches may be better.

There is an opportunity (which some projects are pursuing) to build a consensus mechanism that is minimal enough to act—like IP did for the internet—as the aggregator that decouples the application layer (smart contracts and user-facing clients) from the raw infrastructure layer.

Doing that might just be all that is needed to enable *any* service provider (miners/validators) using *any* technology for verifiable computation (optimistic rollups, zero-knowledge proofs, etc.) to service *any* application written in *any* programming language.
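As a rough sketch of what "minimal enough" could mean, the Python below keeps the core down to one job: append a claim to the log if some registered verifier accepts it. All names here (`Verifier`, `MinimalLedger`, `RecomputeVerifier`, `DigestVerifier`) are hypothetical, and the two verifiers are toy stand-ins. They are not implementations of optimistic rollups or zero-knowledge proofs, and the sketch omits ordering, staking, and everything else a real consensus mechanism needs.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Protocol


class Verifier(Protocol):
    """The only thing the core asks of a verification technology."""
    def verify(self, claim: bytes, evidence: bytes) -> bool: ...


class RecomputeVerifier:
    """Toy stand-in: accept a claim if redoing the work reproduces it."""
    def verify(self, claim: bytes, evidence: bytes) -> bool:
        return claim == evidence.upper()


class DigestVerifier:
    """Toy stand-in: accept a claim if the evidence is its SHA-256 digest.
    This is NOT a zero-knowledge proof; it only shows a second, unrelated scheme."""
    def verify(self, claim: bytes, evidence: bytes) -> bool:
        return hashlib.sha256(claim).digest() == evidence


@dataclass
class MinimalLedger:
    """The core knows nothing about how claims are verified, only that they were."""
    verifiers: dict[str, Verifier]
    log: list[bytes] = field(default_factory=list)

    def submit(self, scheme: str, claim: bytes, evidence: bytes) -> bool:
        verifier = self.verifiers.get(scheme)
        if verifier is None or not verifier.verify(claim, evidence):
            return False
        self.log.append(claim)
        return True


ledger = MinimalLedger({"recompute": RecomputeVerifier(), "digest": DigestVerifier()})
ok_a = ledger.submit("recompute", b"HELLO", b"hello")
ok_b = ledger.submit("digest", b"state-7", hashlib.sha256(b"state-7").digest())
rejected = ledger.submit("digest", b"state-8", b"not-a-digest")
```

Swapping in a new verification technology means registering another `Verifier`; neither `MinimalLedger` nor the applications that read its log have to change.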

And, as with IP, a narrow waist at consensus sets up a similar two-sided network effect and flywheel: more nodes providing security/computation lead to more developers building applications, which leads to more users who (via those applications) demand more security/computation.

This suggests that there will be a winner-take-all dynamic at play between projects building blockchain computers. The winning network may well be one which, at its core, is radically agnostic about the tech that is used below it and the kinds of programs that are written above.

It’s worth remembering that IP, designed in the 1970s, went on to face many competing standards (like XNS and, later, ATM). They had more features, which made them less modular and evolvable, and they ultimately lost to IP’s minimalism.

As with IP, a consensus mechanism designed to place radical minimalism and modularity above all else will confer on its parent blockchain computer the strongest network effects and the greatest addressable market.

{fin}

This was inspired by a few conversations with @martin_casado. Thanks to @BradUSV, Josh Williams, @skominers, @poojaks_, @danboneh, @juanbenet, and @cdixon for conversations/feedback about these ideas.