**#Tweetorial**

Today, I presented @SAA2020, on the use of compliance thresholds in intensive longitudinal data. This thread is for those who couldn’t make it.

⚠️ Warning ⚠️

This may upset ambulatory assessment researchers.

(1)


Ecological momentary assessment studies ask ppl to complete the same questions over & over again in their daily lives. Often 40-100+ times

Answering these questions isn’t for the faint of ❤️ & often leaves large % of missing data for some

🤫 Even me trialing my own studies

(2)


There are many different flavors of missingness:

1-Missing completely due to chance (missing completely at random)

2-Missing due to a variable observed in the study (missing at random)

3-Missing due to an outside influence not measured in the study (missing not at random)

(3)
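The three flavors can be made concrete with a small simulation. This is a minimal sketch, not anything from the talk: the variable names (`stress`, `mood`), the effect sizes, and the missingness probabilities are all made-up assumptions chosen only to show how the average of the observed entries behaves under each mechanism.

```python
import random
from statistics import mean

def simulate(mechanism, n=20000, seed=0):
    """Return (full_mean, observed_mean) of a toy 'mood' variable.

    All numbers here are illustrative assumptions, not estimates from any study.
    """
    rng = random.Random(seed)
    all_mood, observed_mood = [], []
    for _ in range(n):
        stress = rng.gauss(0, 1)                  # covariate that is always observed
        mood = -0.8 * stress + rng.gauss(0, 0.6)  # outcome that may go missing
        if mechanism == "MCAR":    # missingness ignores everything
            p_missing = 0.4
        elif mechanism == "MAR":   # missingness depends on an observed variable
            p_missing = 0.7 if stress > 0 else 0.1
        elif mechanism == "MNAR":  # missingness depends on the unseen value itself
            p_missing = 0.7 if mood < 0 else 0.1
        else:
            raise ValueError(f"unknown mechanism: {mechanism}")
        all_mood.append(mood)
        if rng.random() >= p_missing:
            observed_mood.append(mood)
    return mean(all_mood), mean(observed_mood)

for mechanism in ("MCAR", "MAR", "MNAR"):
    full, observed = simulate(mechanism)
    print(f"{mechanism}: full mean {full:+.2f}, observed-only mean {observed:+.2f}")
```

In this toy setup the observed-only mean stays close to the full mean under MCAR and drifts upward under MAR and MNAR. Under MAR the drift could in principle be corrected using `stress`, since it is observed; under MNAR it cannot be corrected from the observed data alone.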


Many of you are probably already aware that it's problematic to throw out an entire record b/c a single entry is missing (i.e. listwise deletion).

Listwise deletion:

⛔️ Lowers power

⛔️ Biases parameter estimates

⛔️ Affects standard error estimation

(3)


However, in 2020 the "recommended" practice in ambulatory assessment is to use and justify compliance thresholds.

🤔 Hmm, so we "should":

Do more than just throw out incomplete observations, & throw out many completed rows of data

Data that ppl painstakingly responded to?

😵

(4)


Excluding those struggling the most

E.g., I study depression.

Those with

Using these arbitrary thresholds systematically eliminates many of the people I want to study

(5)

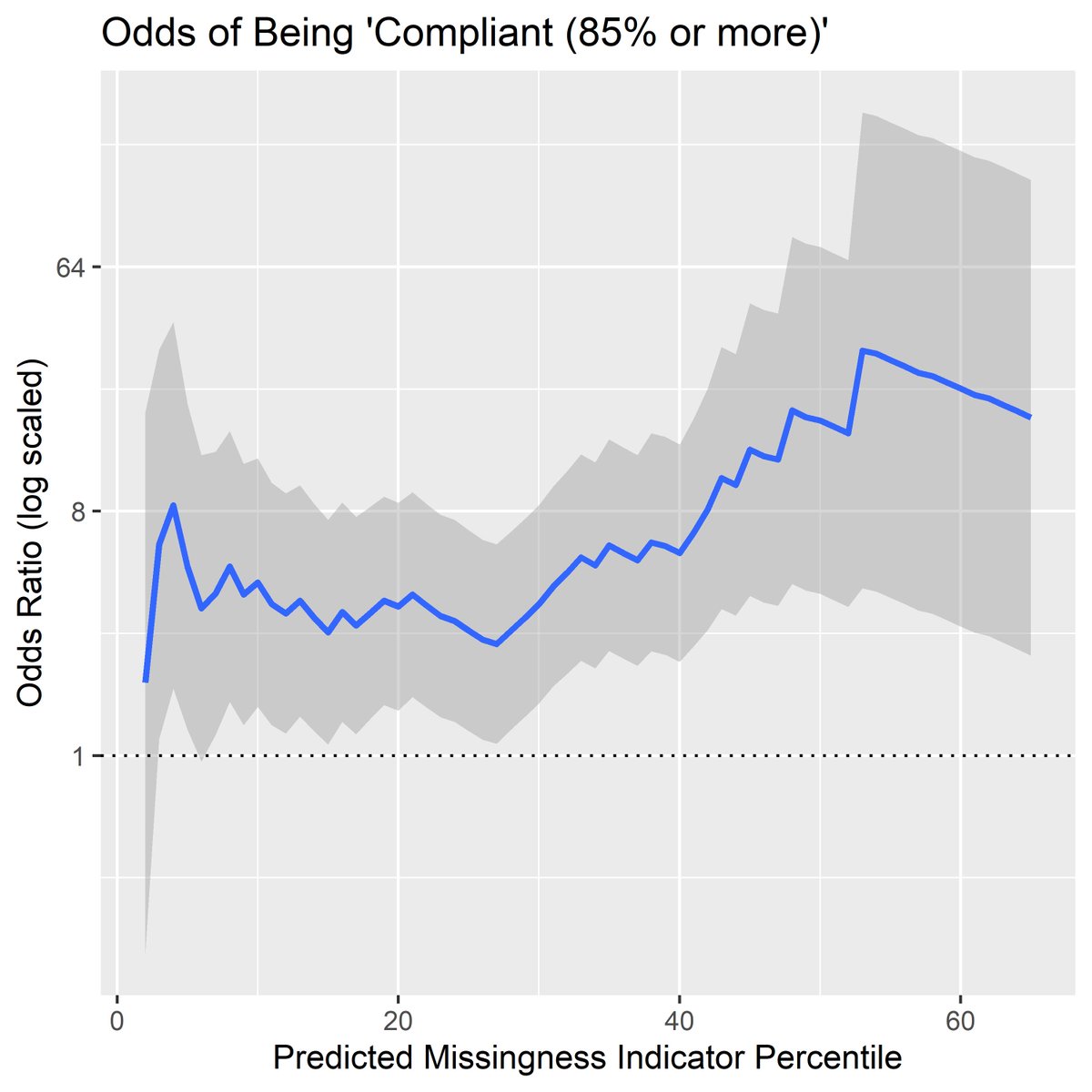

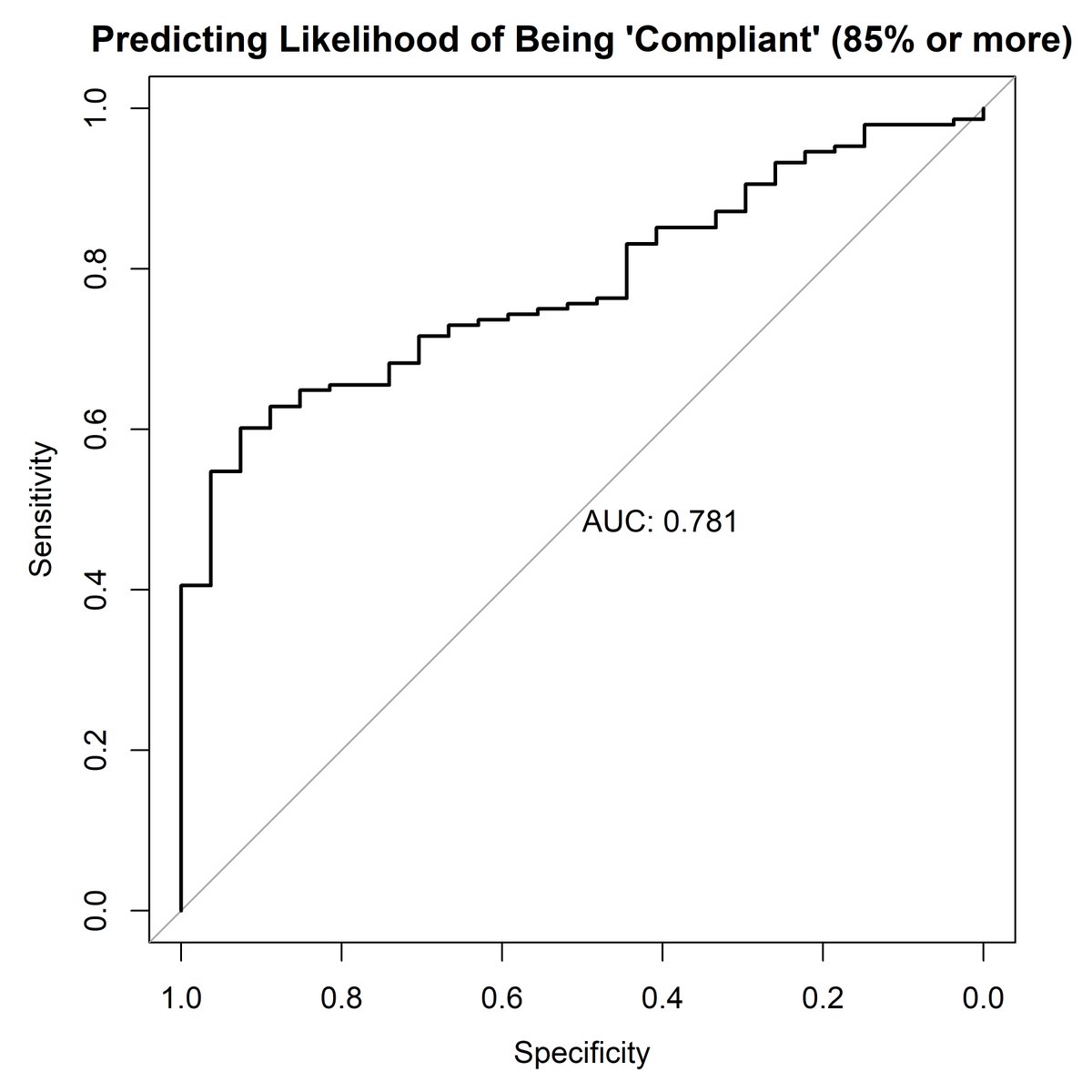

In a 50-day daily diary study in a sample of 176 people:

I studied whether I could predict who would have high compliance based on individual differences in personality, affect, and psychopathology traits using #MachineLearning

(6)


I could predict those with high compliance w/ good discrimination (AUC = 0.78)

Those in the predicted top half of the range score were 15x more likely to be “compliant” than those in the bottom half.

(7)
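For readers less familiar with AUC: it is the probability that a randomly chosen "compliant" person receives a higher predicted score than a randomly chosen "non-compliant" person, so 0.5 is chance and 1.0 is perfect ranking. The sketch below computes it by direct pairwise comparison. The scores and labels are toy values invented for illustration, not data from the study, and `auc` is a hypothetical helper rather than the method used in the talk.

```python
def auc(scores, labels):
    """Area under the ROC curve via pairwise comparison.

    labels: 1 = "compliant", 0 = "non-compliant". Ties count as half a win.
    """
    positives = [s for s, y in zip(scores, labels) if y == 1]
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one example of each class")
    wins = 0.0
    for p in positives:
        for q in negatives:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(positives) * len(negatives))

# Toy example (made-up predicted scores and compliance labels)
toy_scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1]
toy_labels = [1, 1, 0, 1, 1, 0, 0, 0]
print(auc(toy_scores, toy_labels))  # 0.875
```

The pairwise form is quadratic in sample size, which is fine for a sample of a few hundred people; a rank-based formula gives the same number faster on large data.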


This means that data are *NOT* missing due to chance.

When we throw out ppl’s responses by using compliance thresholds, we are introducing bias as to who is included in our studies

(8)
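The selection effect can be sketched with a toy simulation. Everything here is an assumption made for illustration: the 80% threshold, the 50 prompts, and especially the link between a standardized `depression` trait and the chance of answering a prompt. It is not a reanalysis of the study data.

```python
import random
from statistics import mean

def simulate_threshold(n_people=2000, n_prompts=50, threshold=0.8, seed=1):
    """Return (mean trait of everyone, mean trait of retained, share retained)."""
    rng = random.Random(seed)
    everyone, retained = [], []
    for _ in range(n_people):
        depression = rng.gauss(0, 1)  # standardized trait score (hypothetical)
        # Assumption: higher depression lowers the chance of answering each prompt
        p_respond = min(0.95, max(0.05, 0.75 - 0.15 * depression))
        answered = sum(rng.random() < p_respond for _ in range(n_prompts))
        everyone.append(depression)
        if answered / n_prompts >= threshold:  # the compliance threshold
            retained.append(depression)
    return mean(everyone), mean(retained), len(retained) / n_people

all_mean, kept_mean, share = simulate_threshold()
print(f"everyone: {all_mean:+.2f}, retained: {kept_mean:+.2f}, share kept: {share:.0%}")
```

Under these assumptions the retained sample has a clearly lower average trait score than the full sample, which is the pattern the thread warns about: the threshold quietly filters out the people who are struggling most.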


So what are researchers to do here?

My co-authors and I have found that proper model-based missing data strategies can help offset the impact of missing data in intensive longitudinal data studies:

https://tandfonline.com/doi/abs/10.1080/10705511.2017.1417046?journalCode=hsem20

(9)


Appropriate methods can handle ⬆️ % missing even in complicated multivariate models with high lags (70%+ here):

https://link.springer.com/article/10.3758%2Fs13428-018-1101-0

My simulations w/ 90%+ missing data can achieve:

✅ Good point estimates

✅ Good SEs

More important than % missing: the # of complete observations

(10)


I am calling for researchers to 🛑 STOP 🛑 this practice!

Do: Use all the observations in the study from all participants!

Do: Use appropriate model-based missingness strategies

Do: Use passive sensing to give you more information about participants

(11)


Reviewers/Editors, you 🛑 too!

🚫 Penalize studies that find ⬆️ missingness

This could be naturally occurring based on the population being studied. Evaluating study quality by % missingness WILL bias the literature.

👍 Do: Ask for all persons to be included in analyses

(12)


My slides are all available here:

http://www.nicholasjacobson.com/talk/saa2020_compliance_thresholds/

Paper & more data will be coming soon to a journal near you.

Thanks to all who have already suffered my Twitter rants, including @FallonRGoodman, @aidangcw, @aaronjfisher, among others

(/end rant)

#SAA2020aus
