Links to some real-world examples of equalitarian/political biases leading literatures to be purged of "distasteful" results. From ... psychological science! ("Hey, it's science! Are you a science denier?").

Thread ending in END. https://twitter.com/PsychRabble/status/1213462601155387393

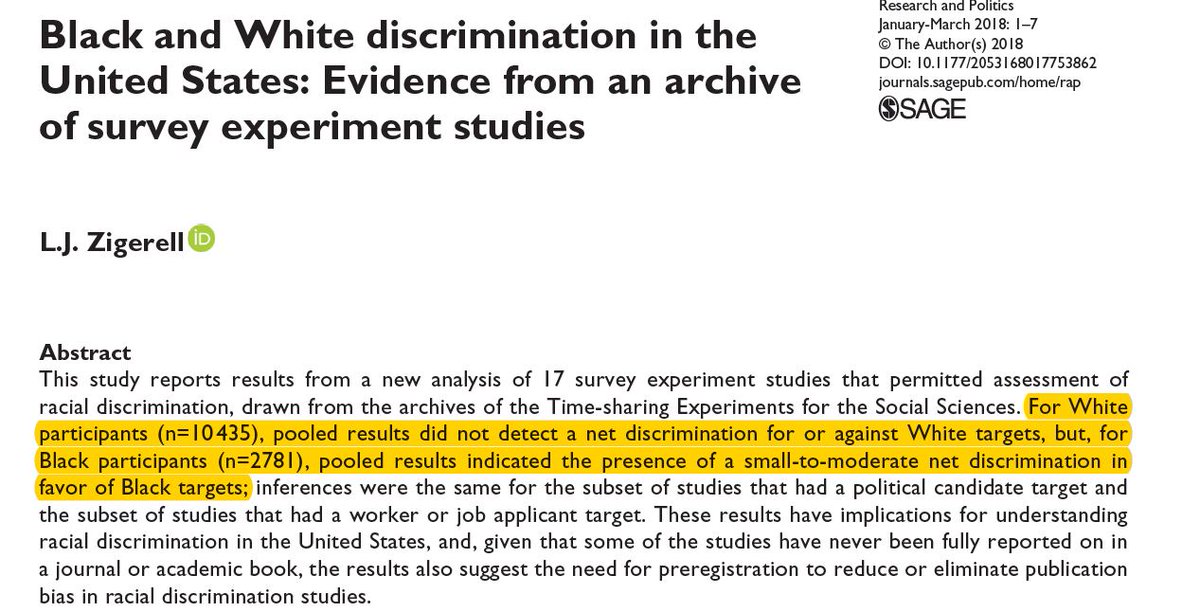

Zigerell's (@LJZigerell) great forensic work uncovered 17 UNPUBLISHED (yes, count 'em, 17) national experiments with representative samples, totaling >10k participants, finding...ready?...no evidence of racial bias among whites (but ingroup favoritism among blacks).

https://journals.sagepub.com/doi/full/10.1177/2053168017753862

More selective reporting:

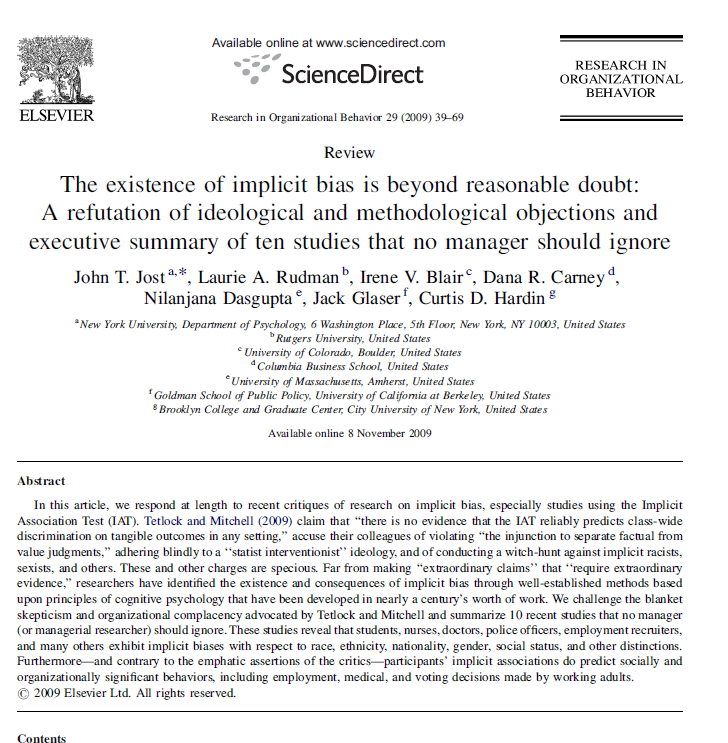

This paper by Jost et al., responding to a paper by Blanton et al. arguing that the race IAT ain't what it's cracked up to be, reported "10 studies no manager should ignore" on the supposed reality of implicit bias.

So where's the selective reporting? They just kinda forgot to tell you that:

7 of the 10 papers did not address racial bias (instead: drug users, political candidates, etc.).

Of the 3 papers that did address racial bias?

They reported 4 studies:

1. Two found no evidence of racial bias at all.

2. One did not test whether the small bias it found differed statistically from egalitarian responding.

3. One found bias in 1 of 4 conditions (no bias in the other 3).

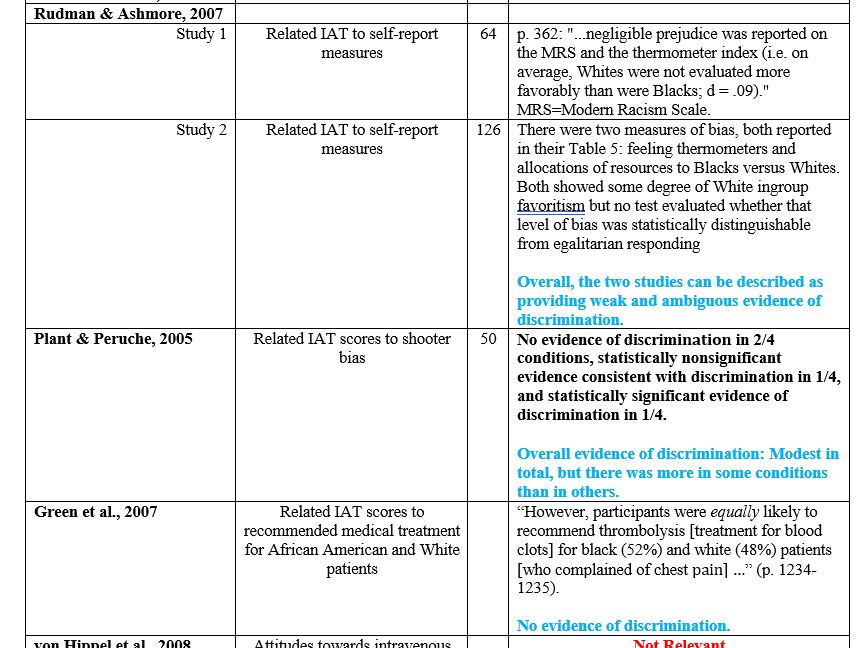

We reviewed this state of affairs in this paper:

https://osf.io/3jk6z/

Here is an excerpt from our table summarizing the results Jost et al. simply did not report from those 3 papers:

(click to see whole excerpt; full table in the paper).

Often, selective reporting hides in plain sight. You just have to do an itsy bitsy teenie weenie bit of actual thinking to see it.

For example, if you see a headline "6% of Americans really are alt-right! OMG WE ARE ALL GONNA DIE FROM WHITE SUPREMACY!", remember: the actual result shows that 94% are NOT alt-right. There have always been, and always will be, radical fringe groups, left and right.

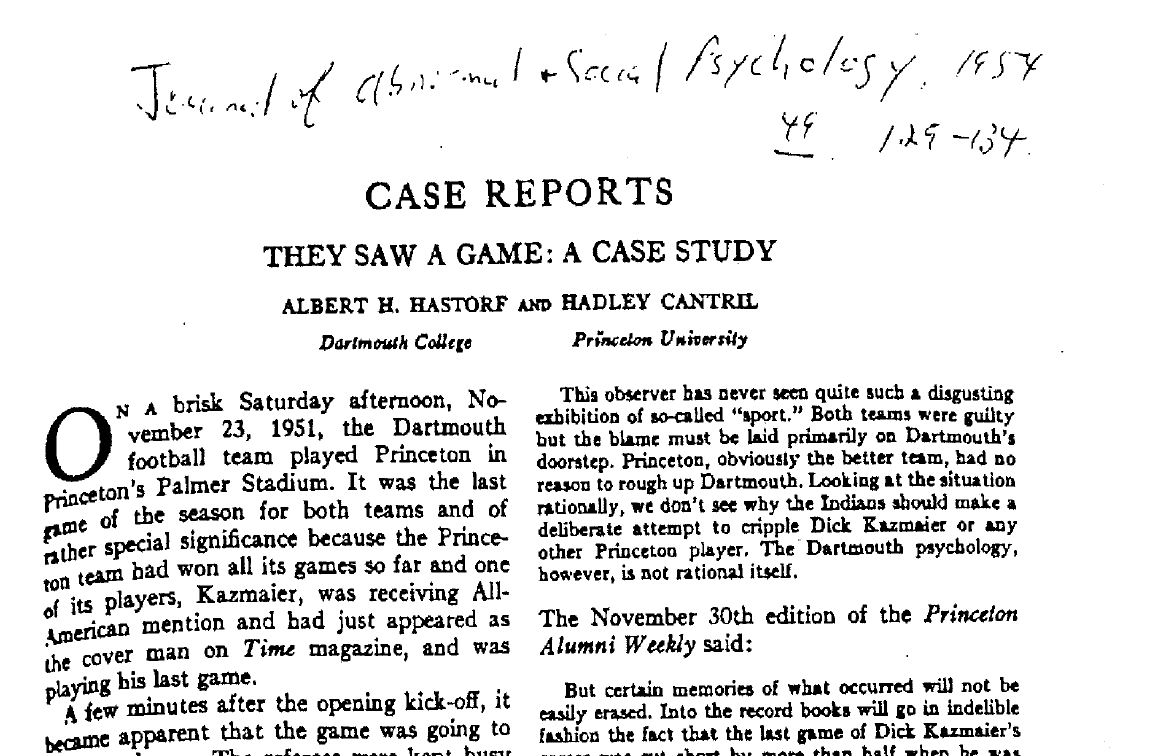

One of my fav examples of a selective result hiding in plain sight comes from this famous paper, which claimed to show there is no reality; everything is subjective perception. It's one of the most famous papers in early social psych:

The article was on the supposedly vastly differing perceptions of a controversial football game between Dartmouth and Princeton in the 1950s (when the Ivies were powerhouses).

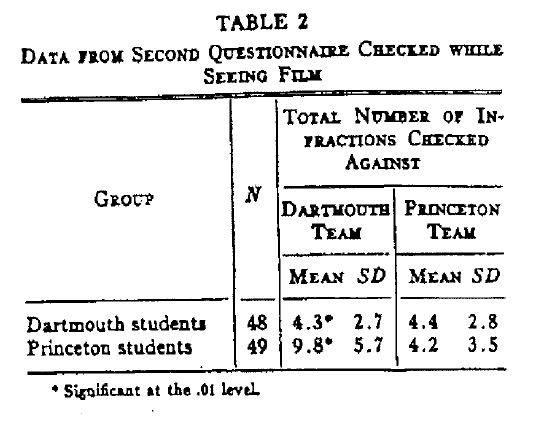

From the article. Perception is so subjective that:

"There is no such 'thing' as a 'game' existing 'out there' in its own right which people merely 'observe'... the 'thing' is *not* the same for different people..."

On what grounds did they reach these extraordinary and radical conclusions? Other than some wild newspaper reports, the only data they had on anything objectively verifiable showed that Princeton students saw Dartmouth commit about 6 more infractions than Dartmouth students saw.

So what's hiding in plain sight?

HOW MUCH OF THE GAME THEY SAW THAT WAS IDENTICAL!

Most football games have well over 100 plays. Even if we assume a super-slow game with only 80 plays, there was no bias on 74 of them: 74/80 = 92.5%.

So on at least 92% of plays, there was no bias in perceptions of infractions. This is the basis for the extreme conclusion that "there is no such thing as a game" because of radical subjectivity?

Citation biases can be viewed as a form of reporting bias: The author(s) simply do not report evidence contradicting their claims or theory.

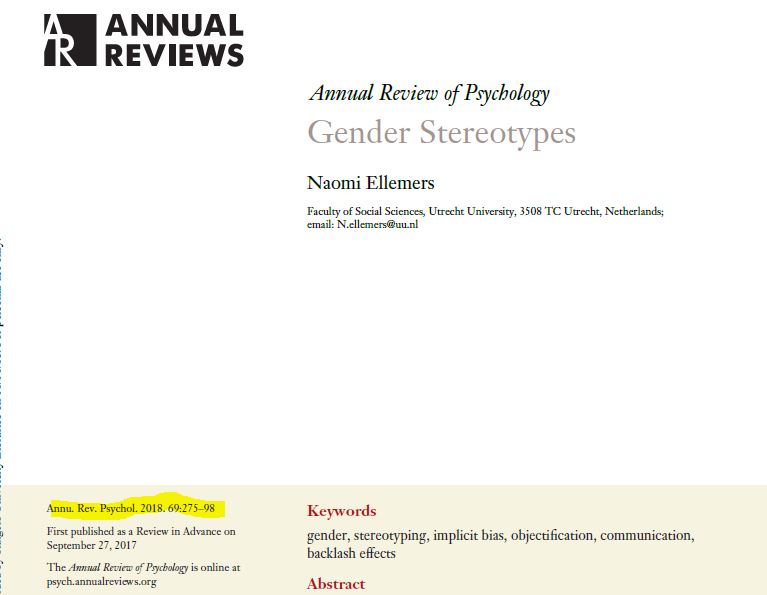

The most egregious example I know is this review in the prestigious, high-impact Annual Review of Psychology, which concluded that gender stereotypes were mostly inaccurate:

How did it reach that conclusion? Literally, by ignoring 11 papers reporting 16 studies that assessed gender stereotype accuracy. I reported that state of affairs (including full references to all 11 ignored papers) here: https://www.psychologytoday.com/us/blog/rabble-rouser/201806/gender-stereotypes-are-inaccurate-if-you-ignore-the-data

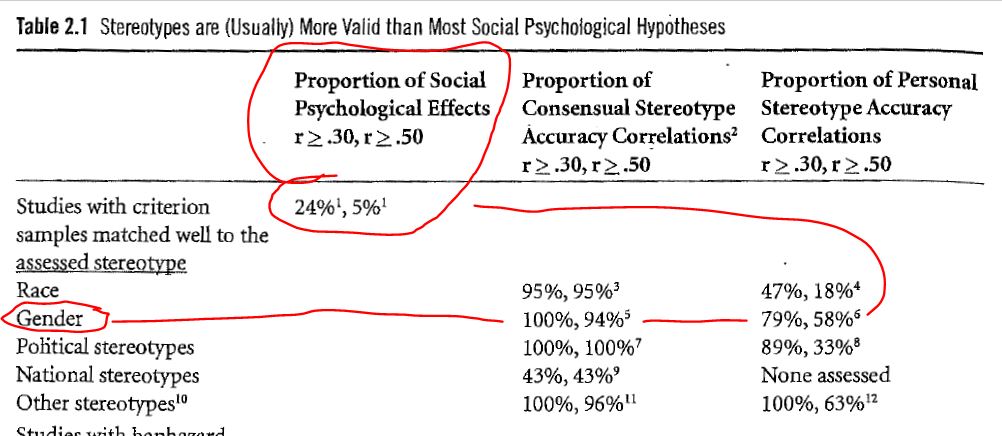

What did those 11 papers find? Here is an excerpt from a table reporting those results. Note the table's title:

"Stereotypes are (Usually) More Valid than Most Social Psychological Hypotheses"

The paper that reports that table is available here:

http://sites.rutgers.edu/lee-jussim/wp-content/uploads/sites/135/2019/05/one-of-the-largest.pdf

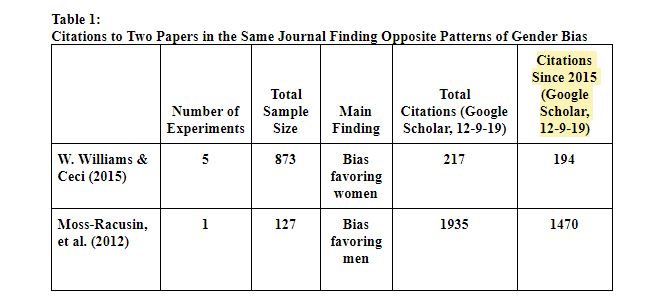

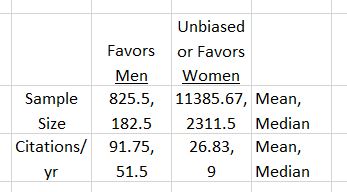

Here is yet another example. In 2012, Moss-Racusin et al. published a paper in PNAS showing pro-male gender bias among science faculty evaluating applicants for a lab manager position. In 2015, Williams & Ceci published a paper showing pro-female gender bias across STEM fields in faculty hiring.

Note that, counting only citations AFTER Williams & Ceci came out, M-R has been cited over 7x more often. In other words, well over 1,000 papers cite M-R without even mentioning W&C.

What's even more extraordinary is the superiority of W&C on virtually all quantifiable measures of methods quality:

W&C: 5 studies, N=873, within- and between-subjects designs, multiple operationalizations of applicant quality.

M-R: 1 study, N=127, between subjects, 1 operationalization of applicant quality.

This issue does not seem to be restricted to this particular pair of studies. I blogged about this here. Routinely, studies finding pro-male bias in peer review are:

1. Lower methodological quality

2. More highly cited

than studies finding pro-female bias.

https://www.psychologytoday.com/us/blog/rabble-rouser/201906/scientific-bias-in-favor-studies-finding-gender-bias

In that essay, I report this table**, which shows sample size and citations by direction of bias found: studies finding pro-male bias had far smaller samples but more citations.

**full references can be found in a separate essay, which is linked.

So far, most of what is above can be viewed as "equalitarian" biases: a quasi-religious (dogmatic, non-disconfirmable) belief in the power of discrimination. I mean, if you "know" discrimination is powerful/pervasive, why cite a study showing no bias? It "must" be wrong!

But these biases are not restricted to equalitarian biases. This famous paper (also by Jost et al) reported a meta-analysis showing conservatives WAY MORE rigid/dogmatic than liberals.

Jost et al 2003:

Studies in meta-analysis: 88, citations since 2011: 3060

Van Hiel et al 2010:

Studies in meta-analysis: 127, citations since 2011: 173

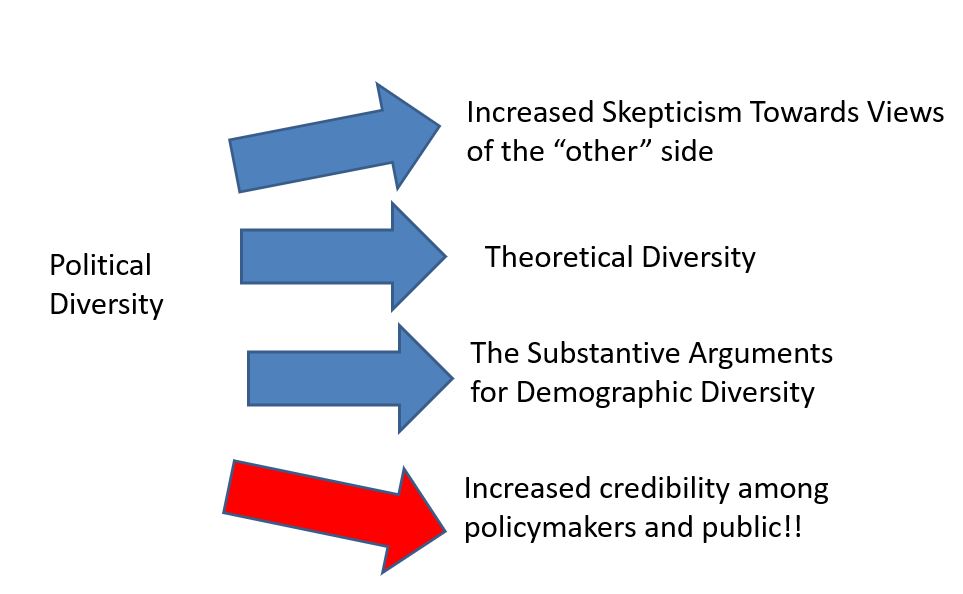

There's a vigorous science reform effort in psychology, but it focuses almost exclusively on methods, stats, and transparency practices. This is good.

But there are literally no improvements in measurement, transparency or stats capable of solving these problems.

Short of trashing the whole system and rebuilding from scratch, what might?

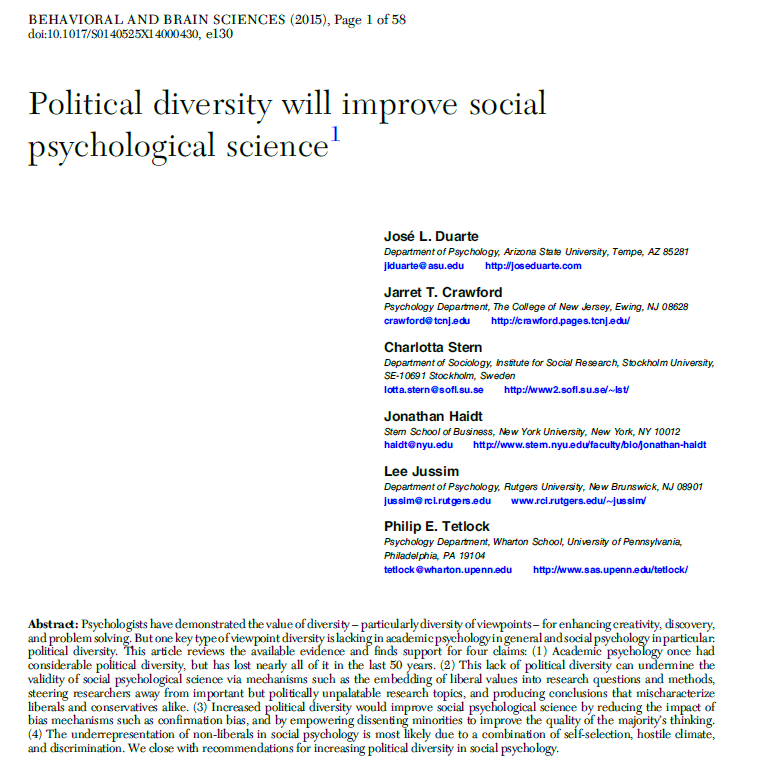

Well, if the problem is political/equalitarian biases and blindspots, then:
