1/ A decade ago we weren’t sure neural nets could ever deal with language.

Now the latest AI models crush language benchmarks faster than we can come up with language benchmarks.

Far from having an AI winter, we are having a second AI spring.

2/ The first AI spring was, of course, ImageNet. Created by @drfeifei and team in 2009, it was the first large-scale image classification problem built on photos rather than handwriting and thumbnails.

In 2012, AlexNet, trained on GPUs, took first place. In 2015, ResNet reached human-level performance.

3/ In the years that followed, neural nets made strong progress on speech recognition and machine translation.

Baidu’s Deep Speech 2 recognized spoken Chinese on par with humans. Google’s Neural Machine Translation system reduced translation errors by about 60% compared with Google’s existing phrase-based system.

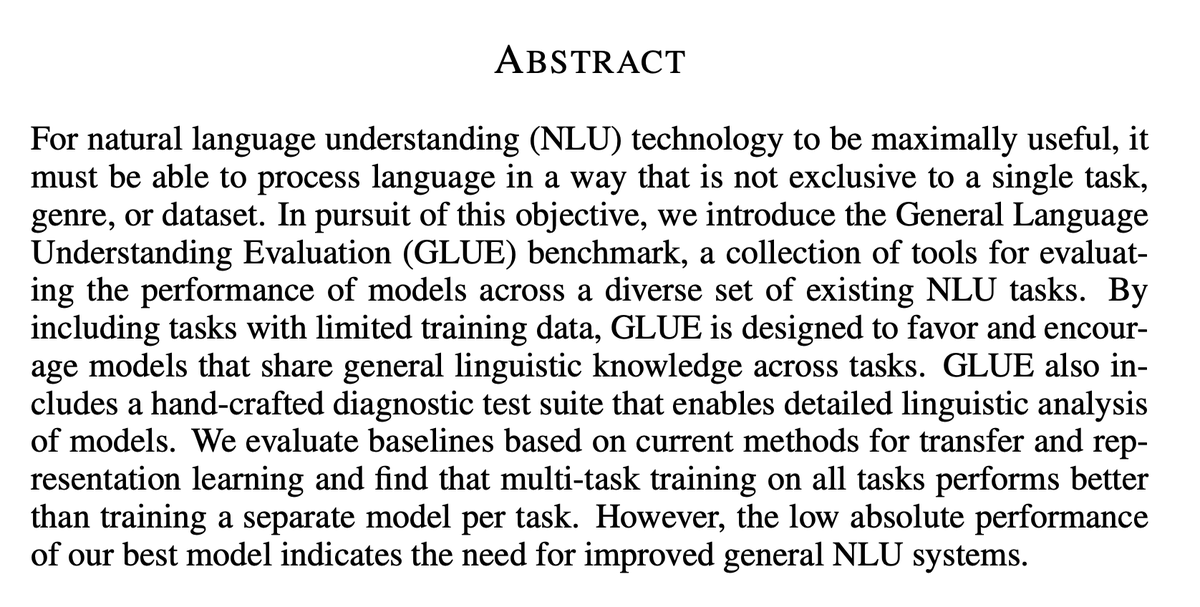

4/ In language understanding, neural nets did well on single tasks such as WikiQA, TREC, and SQuAD, but it wasn’t clear they could master a range of tasks the way humans do.

So GLUE was created: a set of 9 diverse language tasks that, it was hoped, would keep researchers busy for years.

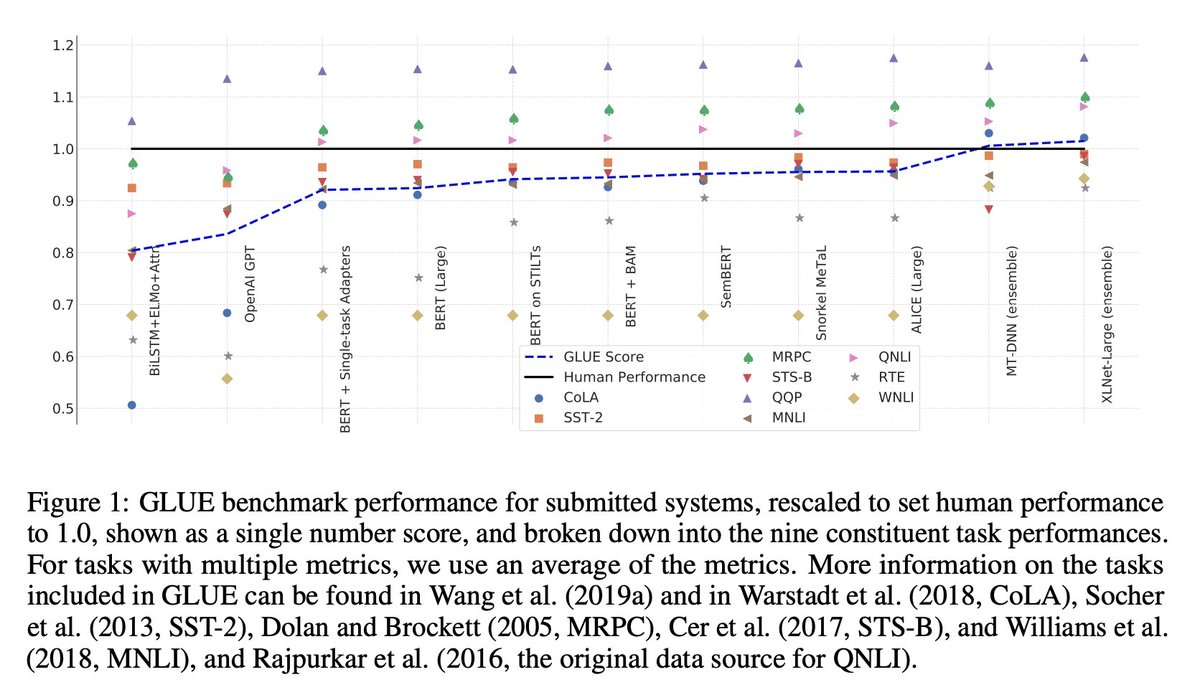

5/ It took six years for neural nets to catch up to human performance on ImageNet.

Transformer-based neural nets (BERT, GPT) beat human performance on GLUE in less than one year.

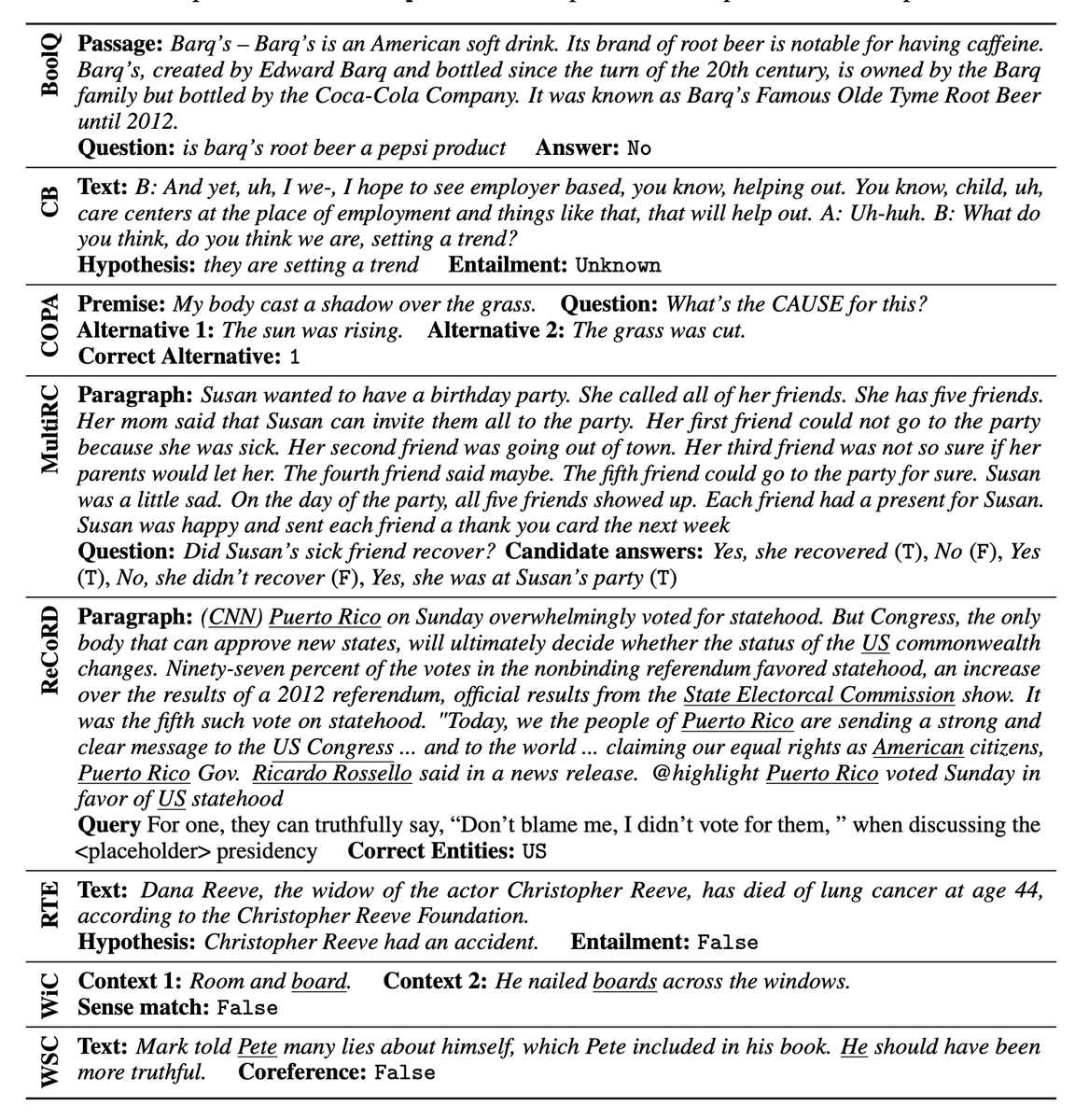

6/ Progress in language understanding was so rapid that the authors of GLUE were forced to create a new version of the benchmark, SuperGLUE, in 2019.

SuperGLUE is HARD, far harder than a naive Turing Test. Just look at these prompts:

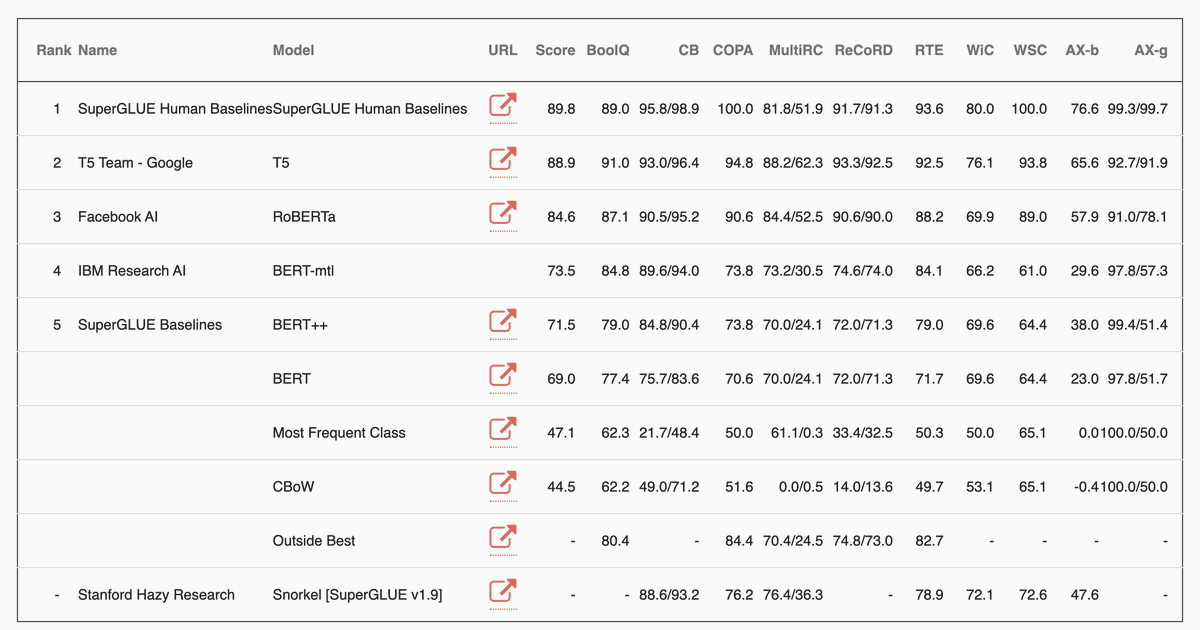

7/ Will SuperGLUE stand the test of time? It appears not. Six months in, Google’s T5 model is already within 1% of human performance.

8/ Neural nets are beating language benchmarks faster than benchmarks can be created.

Yet first-hand experience contradicts this progress: Alexa, Siri, and Google still lack basic common sense.

Why? Is it just a matter of time before these models are deployed, or are diverse human questions simply much harder?