[THREAD] There is a lot of twitter buzz about this new paper on gender and race bias in student evaluations.

Well, I have some comments *about this paper*

1/ https://www.cambridge.org/core/journals/ps-political-science-and-politics/article/exploring-bias-in-student-evaluations-gender-race-and-ethnicity/91670F6003965C5646680D314CF02FA4

First, the good: The basic research design is great. The classes are online courses that were run by a single admin throughout the semester. The only difference was the brief video intro by the professor. This is a really clean way to run a realistic quasi-experimental design. 2/

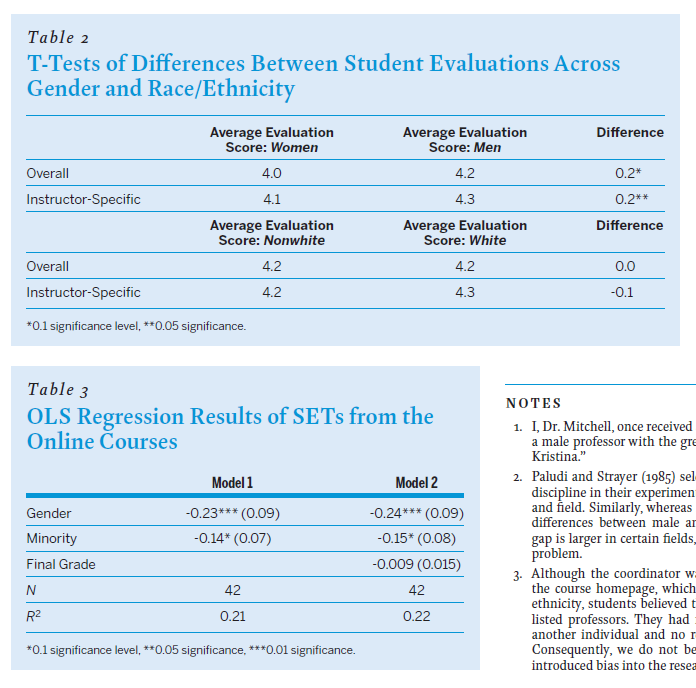

Now for the less good: The overall sample size is small -- 14 professors. The analysis used t-tests to determine differences in overall course ratings by gender and race, and then a regression to see how gender and race uniquely predict course/prof ratings. 3/

With such a small sample size, these analyses are really, really under-powered. Not to mention that the t-test for gender is comparing 11 men vs. 3 women. A more appropriate analysis would have been multi-level modeling given that the data are NESTED by course. 4/
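To put a rough number on "under-powered", here is a minimal simulation sketch of a pooled two-sample t-test with the 11 vs. 3 split. Everything beyond the group sizes is an assumption for illustration: ratings are drawn from normal distributions with equal variance, the effect size of 0.8 standard deviations is a hypothetical "large" effect (not a figure from the paper), and the function names are made up. This is not the paper's analysis, and it ignores the nesting by course.

```python
import random

N_MEN, N_WOMEN = 11, 3   # group sizes mentioned in the thread
T_CRIT_DF12 = 2.179      # two-sided 5% critical value of Student's t, df = 11 + 3 - 2 = 12


def pooled_t(a, b):
    """Two-sample t statistic with a pooled (equal-variance) standard error."""
    na, nb = len(a), len(b)
    mean_a, mean_b = sum(a) / na, sum(b) / nb
    ss_a = sum((x - mean_a) ** 2 for x in a)
    ss_b = sum((x - mean_b) ** 2 for x in b)
    pooled_var = (ss_a + ss_b) / (na + nb - 2)
    se = (pooled_var * (1 / na + 1 / nb)) ** 0.5
    return (mean_a - mean_b) / se


def simulated_power(effect_size, n_sims=20000, seed=0):
    """Share of simulated studies that reject at the 5% level.

    effect_size is the assumed true gap between group means,
    in standard-deviation units (hypothetical, for illustration).
    """
    rng = random.Random(seed)
    rejections = 0
    for _ in range(n_sims):
        men = [rng.gauss(effect_size, 1.0) for _ in range(N_MEN)]
        women = [rng.gauss(0.0, 1.0) for _ in range(N_WOMEN)]
        if abs(pooled_t(men, women)) > T_CRIT_DF12:
            rejections += 1
    return rejections / n_sims


if __name__ == "__main__":
    print("rejection rate with no true effect:", simulated_power(0.0))
    print("power at an assumed effect of 0.8 SD:", simulated_power(0.8))
```

Under these assumptions, the rejection rate with no true effect should sit near the nominal 5%, and the power at 0.8 SD should come out far below the conventional 80% target. With 3 people in one group, even a large true difference would usually be missed.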

Now the worst: Look at the actual results. There is NO effect of race (p=.1 sig level!!). The authors 'relaxed their significance criteria because of the small sample.' WHAT?! The effect of gender is sig at p=.05 level, but remember how skewed the gender balance is (11 vs. 3). 5/

Overall, IMO this paper shows a good proof of concept of a good research design, while the results are nearly useless as to whether there is bias in student evaluations by race and gender /end
