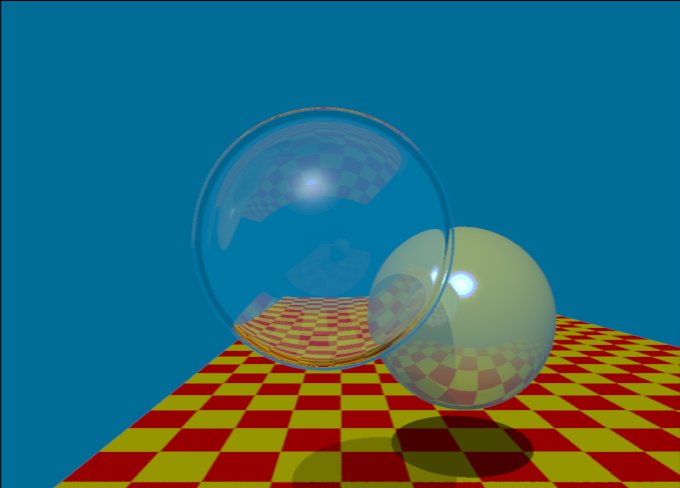

Turner Whitted's first ray-traced image from 1979 took 74 minutes to compute on a 1 MHz mainframe. Computer graphics involves problems in both the digital and the analog domains.

Although graphics is done on digital computers, the model of a scene can be viewed as a symbolic analog representation that the rendering process samples. When we cast rays, for example, we evaluate a function f(u,v) giving the radiance at screen coordinates (u,v).

A problem arises when we violate Nyquist's condition by sampling an image that contains frequencies higher than half the sampling rate. Texture filtering and level of detail attempt to reduce the "bandwidth" of the model, but other means must also be used to mitigate the problem.
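A minimal 1D sketch of why the Nyquist condition matters: once sampled, a sinusoid above half the sampling rate is indistinguishable from a lower-frequency one. The function name `sample_cosine` and the specific frequencies are illustrative assumptions, not from the thread.

```python
import numpy as np

def sample_cosine(freq, sample_rate, n_samples):
    """Sample cos(2*pi*freq*t) at t = n / sample_rate for n = 0..n_samples-1."""
    t = np.arange(n_samples) / sample_rate
    return np.cos(2 * np.pi * freq * t)

# One sample per unit, so the Nyquist limit is 0.5 cycles per unit.
fs = 1.0
high = sample_cosine(0.8, fs, 16)  # above the Nyquist limit
low = sample_cosine(0.2, fs, 16)   # its alias: 1.0 - 0.8 = 0.2
print(np.allclose(high, low))  # True: the samples cannot tell them apart
```

Raising the sampling rate above twice the highest frequency (here, anything over 1.6 samples per unit) makes the two sample sequences differ again.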

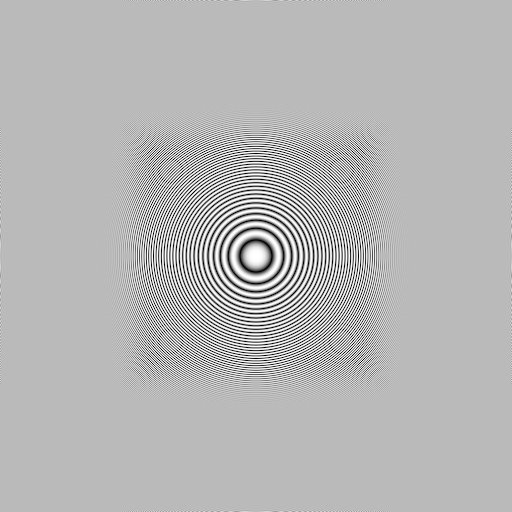

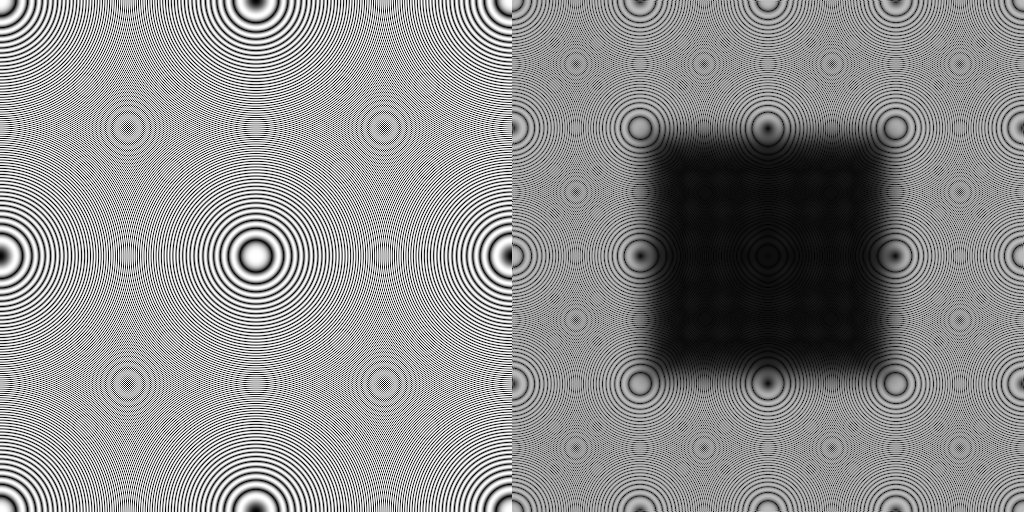

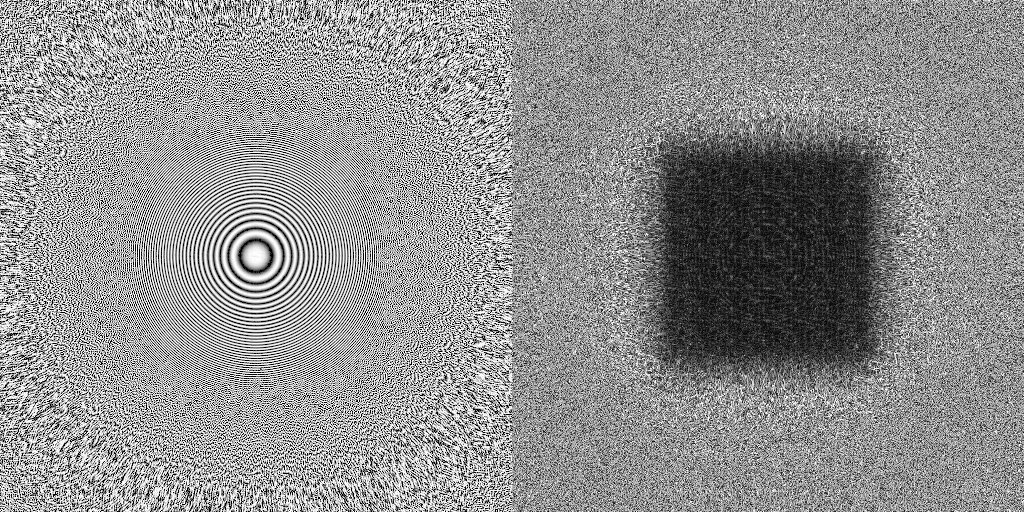

A good test case is the zone plate, sin(r**2): a simple image of radial waves that increase in frequency until the pixel rate can no longer represent them. (View these images at full size; scaled down, they will look bad.)
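A sketch of generating a zone plate by point-sampling at pixel centers. It assumes a scale factor `k` (hypothetical; `k = 1` is the plain sin(r**2)) so the waves cross the Nyquist limit somewhere inside the image. Because the phase is k*r**2, the local radial frequency is k*r/pi cycles per pixel, which reaches the 0.5 cycles-per-pixel limit at r = pi/(2k).

```python
import numpy as np

def zone_plate(size, k):
    """Sample sin(k * r**2) at the pixel centers of a size x size image.

    r is measured in pixels from the image center; k is an assumed scale
    factor (k = 1 gives the plain sin(r**2)).
    """
    c = (size - 1) / 2.0
    y, x = np.mgrid[0:size, 0:size]
    r2 = (x - c) ** 2 + (y - c) ** 2
    return np.sin(k * r2)

def nyquist_radius(k):
    """Radius in pixels where the local frequency k*r/pi reaches 0.5 cycles/pixel."""
    return np.pi / (2.0 * k)

size = 512
k = np.pi / size  # hypothetical choice: aliasing begins at r = size / 2
img = zone_plate(size, k)
print(img.shape, nyquist_radius(k))
```

With `k = 1` the limit is reached at r of about 1.57 pixels, so an unscaled sin(r**2) aliases almost immediately; that is the reason for the assumed scale factor.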

When we sample the zone plate at the pixel rate, moire patterns appear wherever the wave's frequency exceeds the Nyquist limit. Subtracting the true zone plate from the sampled image (left) leaves just the error (right): the high frequencies show up as lower "alias" frequencies.
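A sketch of how high frequencies fold into lower "alias" frequencies, applied to the zone plate's local frequency k*r/pi. The folding rule (a component at f looks like one at |f - m*fs| for the nearest integer m) is standard sampling theory; the scale `k` and the sample radii are illustrative assumptions.

```python
import numpy as np

def alias_frequency(freq, sample_rate=1.0):
    """Apparent frequency of a sinusoid at `freq` after sampling at `sample_rate`.

    Frequencies fold back into [0, sample_rate / 2]: f is indistinguishable
    from |f - m * sample_rate| for the nearest integer m.
    """
    freq = np.asarray(freq, dtype=float)
    return np.abs(freq - sample_rate * np.round(freq / sample_rate))

# Zone plate sin(k*r**2): local frequency k*r/pi cycles per pixel (k is assumed).
k = np.pi / 512
r = np.array([64.0, 256.0, 384.0, 512.0])
true_freq = k * r / np.pi
print(true_freq, alias_frequency(true_freq))
```

Below r = 256 the frequency is reproduced faithfully; past it the apparent frequency falls again, and at r = 512 (exactly one cycle per pixel) it folds to zero, which is why an aliased zone plate shows spurious secondary "centers".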

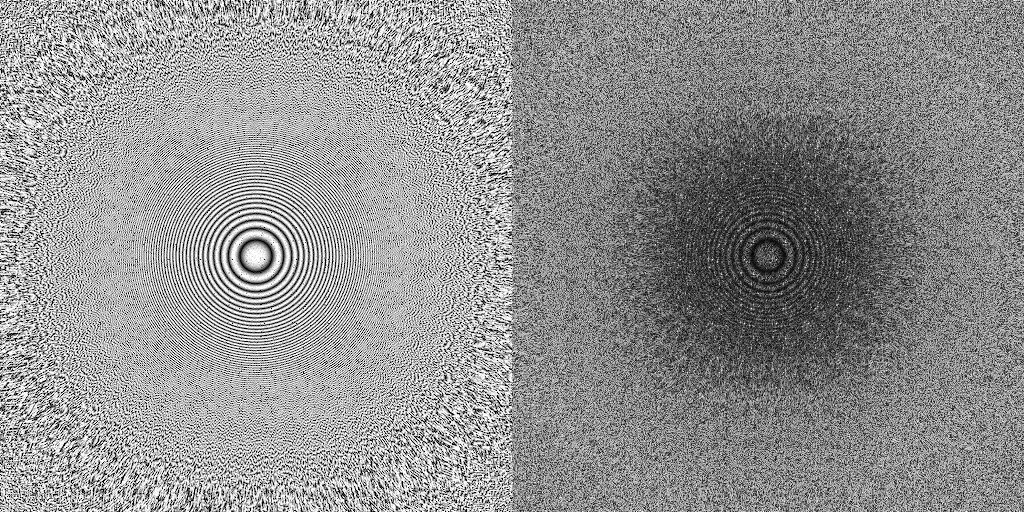

In the mid-1980s, researchers at Lucasfilm/Pixar and at Bell Laboratories (i.e., yours truly) proposed mitigating aliasing by sampling with a high-frequency nonuniform pattern called "blue noise".
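One common way to produce a blue-noise pattern is Poisson-disk sampling by dart throwing: random candidate points are rejected if they land too close to an already accepted point. This is a sketch of that generic technique, not necessarily the method used in the work described above; `dart_throwing` and its parameters are hypothetical names.

```python
import math
import random

def dart_throwing(min_dist, max_points, max_attempts=5000, seed=0):
    """Poisson-disk ("blue noise") points in the unit square by dart throwing.

    Candidates are drawn uniformly at random and rejected if they fall within
    min_dist of any accepted point, so no two points end up closer than min_dist.
    """
    rng = random.Random(seed)
    points = []
    for _ in range(max_attempts):
        if len(points) >= max_points:
            break
        candidate = (rng.random(), rng.random())
        if all(math.dist(candidate, p) >= min_dist for p in points):
            points.append(candidate)
    return points

pts = dart_throwing(min_dist=0.05, max_points=300)
print(len(pts))
```

The minimum-distance rule suppresses low-frequency clumping, which is what pushes the pattern's spectral energy toward high frequencies. Dart throwing is simple but slow as the square fills up, since most late candidates are rejected.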

Sampling an image with this pattern still produces aliasing, but instead of conspicuous moire patterns, the aliasing is randomized into noise. One drawback is that the noise also appears below the Nyquist frequency, in the parts of the image that should be alias-free.
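A sketch of the effect using jittered sampling (one random sample per pixel), a cheap stratified stand-in for a true blue-noise pattern and an assumption here. It compares each jittered sample with the zone plate's value at the pixel center: beyond the Nyquist radius the difference is noise instead of moire, and even at low frequencies it is small but nonzero, which is the below-Nyquist noise described above. `jittered_zone_plate` and `k` are hypothetical.

```python
import numpy as np

def jittered_zone_plate(size, k, seed=0):
    """One jittered sample of sin(k*r**2) per pixel, and its difference from the pixel-center value."""
    rng = np.random.default_rng(seed)
    c = (size - 1) / 2.0
    y, x = np.mgrid[0:size, 0:size].astype(float)
    # Offset each pixel's sample uniformly within its pixel: [-0.5, 0.5) in x and y.
    jx = x + rng.uniform(-0.5, 0.5, size=(size, size))
    jy = y + rng.uniform(-0.5, 0.5, size=(size, size))
    sampled = np.sin(k * ((jx - c) ** 2 + (jy - c) ** 2))
    ideal = np.sin(k * ((x - c) ** 2 + (y - c) ** 2))
    return sampled, sampled - ideal

sampled, error = jittered_zone_plate(256, np.pi / 256)
print(np.abs(error).mean())
```

Displaying `error` as an image should show a grainy field rather than the structured rings of the regularly sampled case.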

That low-frequency noise can be reduced by solving the "reconstruction" problem: properly translating nonuniform image-sample values into uniform pixel values. But I haven't published that yet.
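The reconstruction method mentioned above is unpublished, so the following is not it. It is a generic baseline under stated assumptions: each pixel center (at integer coordinates) takes a weighted average of nearby nonuniform samples using a tent filter of assumed `radius`, normalized by the total weight so uneven sample density does not change brightness. `reconstruct_tent` is a hypothetical name.

```python
import numpy as np

def reconstruct_tent(xs, ys, values, size, radius=1.0):
    """Resample scattered samples onto a size x size pixel grid.

    Each pixel (centered at integer coordinates) gets the average of the
    samples within `radius`, weighted by a tent filter (linear falloff) and
    normalized by the total weight. Pixels with no nearby samples stay 0.
    """
    xs = np.asarray(xs, dtype=float).ravel()
    ys = np.asarray(ys, dtype=float).ravel()
    values = np.asarray(values, dtype=float).ravel()
    out = np.zeros((size, size))
    for i in range(size):
        for j in range(size):
            d = np.hypot(xs - j, ys - i)
            w = np.clip(1.0 - d / radius, 0.0, None)
            total = w.sum()
            if total > 0:
                out[i, j] = np.dot(w, values) / total
    return out
```

Normalizing by the weight sum is what distinguishes this from a plain convolution: a constant image is reproduced exactly regardless of where the samples fall, though the tent filter still blurs detail and leaves some of the noise in place.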
