I am assessing power analyses in published papers (for reasons), and there are some quite common errors. Here follows a thread of these issues. The goal is not to make fun of folks, who I am sure are all trying to do the right thing, but to point out things to avoid doing. 1/11

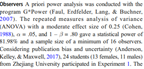

It’s not uncommon to report a power analysis for a one-sided test and then use two-tailed tests. This will overestimate the power of your statistical tests. Please don’t do this ☺️. 2/11
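
To see how large the gap can be, here is a rough sketch using a normal approximation to a two-group comparison. The effect size (d = 0.5), group size (50 per group), and alpha are made-up illustrative values, and `approx_power` is a hypothetical helper, not anything taken from the papers assessed. An exact calculation would use the noncentral t distribution (as G*Power does), so treat the numbers as approximate.

```python
from statistics import NormalDist

def approx_power(d, n_per_group, alpha=0.05, two_sided=True):
    """Approximate power for comparing two equal-sized groups.

    Uses a normal (z) approximation, assuming the true effect lies in
    the predicted direction. Illustrative only.
    """
    z = NormalDist()
    ncp = d * (n_per_group / 2) ** 0.5  # expected value of the test statistic
    if two_sided:
        crit = z.inv_cdf(1 - alpha / 2)
        # Probability of rejecting in either tail
        return (1 - z.cdf(crit - ncp)) + z.cdf(-crit - ncp)
    crit = z.inv_cdf(1 - alpha)
    return 1 - z.cdf(crit - ncp)

# Hypothetical planning values
one_sided = approx_power(0.5, 50, two_sided=False)
two_sided = approx_power(0.5, 50, two_sided=True)
print(f"planned (one-sided): {one_sided:.2f}")
print(f"actual  (two-sided): {two_sided:.2f}")
```

Under these assumptions the one-sided plan suggests roughly 80% power, while the two-tailed test that is actually run has closer to 70%.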


It’s also not uncommon for people to report power analyses for a different analytic strategy than is used to analyse the data. If you’re planning your study using a power analysis, you should make sure you plan for the analysis you will conduct. 3/11

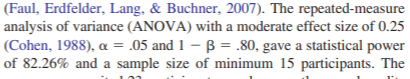

Often important parameters are not reported – e.g., people do not always state the effect size or goal power that they used in a power calculation. You should report all of the values used in a power calculation. 4/11

Equally, it’s probably better to directly state the effect size used in a power analysis rather than making people go to another paper to find it. 5/11

Sometimes people don’t state which type of effect size they used – without knowing which was used it’s hard to know whether the chosen value was reasonable (Cohen’s f was probably used here, but I only know that because I have hung out with G*Power a lot). 6/11
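
A small sketch of why the effect size type matters: the same number means very different things on different scales. The conversions below are the standard ones for two equal-sized groups (f = d / 2) and for eta squared (f² / (1 + f²)); the value 0.25 is just an illustrative number, and the function names are hypothetical.

```python
def f_to_d(f):
    """Cohen's f -> Cohen's d. Holds only for two equal-sized groups."""
    return 2 * f

def f_to_eta_squared(f):
    """Cohen's f -> eta squared (proportion of variance explained)."""
    return f ** 2 / (1 + f ** 2)

reported_value = 0.25  # hypothetical "effect size = 0.25" with no type given

# If the authors meant Cohen's f, this corresponds to d = 0.5
d_if_value_is_f = f_to_d(reported_value)
eta2_if_value_is_f = f_to_eta_squared(reported_value)

print(f"read as f: d = {d_if_value_is_f:.2f}, eta^2 = {eta2_if_value_is_f:.3f}")
print(f"read as d: d = {reported_value:.2f}")
```

Read as Cohen’s f, 0.25 is a "medium" effect by Cohen’s benchmarks; read as Cohen’s d, it is close to "small". The required sample size differs a lot between those two readings.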

People often also just use Cohen’s effect size benchmarks for a power analysis, which seem extremely unlikely to be the best possible estimate of the effect size under study that you could develop. 7/11

People sometimes worry about ‘overpowered’ studies, but as long as the effect sizes are considered when interpreting results, this is not really a legitimate concern (barring wasting participants or your own time and resources, involving participants in risky research, etc.) 8/11

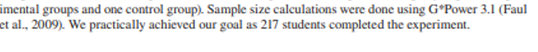

“we practically achieved our goal sample size” means “we did not achieve our goal”. To avoid ambiguous language, you could report a sensitivity analysis to show that you almost reached your goal level of power at your effect size estimate despite the missing participants. 9/11
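
A sensitivity analysis of this kind could look roughly like the sketch below. It again uses a normal approximation for a two-sided, two-group comparison; the planned effect size (d = 0.5), target power (0.80), and achieved group size (58) are invented for illustration, and both helper functions are hypothetical.

```python
from statistics import NormalDist

Z = NormalDist()

def power_two_sided(d, n_per_group, alpha=0.05):
    """Approximate two-sided power for two equal-sized groups (z approximation)."""
    ncp = d * (n_per_group / 2) ** 0.5
    crit = Z.inv_cdf(1 - alpha / 2)
    return (1 - Z.cdf(crit - ncp)) + Z.cdf(-crit - ncp)

def min_detectable_d(n_per_group, power=0.80, alpha=0.05):
    """Smallest d detectable with the given power (ignores the negligible far tail)."""
    return (Z.inv_cdf(1 - alpha / 2) + Z.inv_cdf(power)) / (n_per_group / 2) ** 0.5

# Hypothetical study: planned for d = 0.5 at 80% power, recruited 58 per group
achieved_n = 58
achieved_power = power_two_sided(0.5, achieved_n)
detectable_d = min_detectable_d(achieved_n)

print(f"power at d = 0.5 with n = {achieved_n}/group: {achieved_power:.2f}")
print(f"smallest d detectable at 80% power: {detectable_d:.2f}")
```

Reporting both numbers (here, roughly 77% power at the planned effect size, or 80% power for d of about 0.52) tells readers exactly how far short the study fell.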

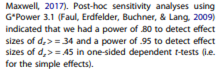

Post hoc power analyses using observed effect sizes to dismiss non-significant findings are also still quite common despite the circular reasoning. It is better to just report confidence intervals around parameter estimates. 10/11
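
As a minimal sketch of the alternative, here is a confidence interval for a difference in means computed from summary statistics. All the numbers are invented, `mean_diff_ci` is a hypothetical helper, and the interval uses a normal quantile for simplicity; with small samples a t quantile would give a slightly wider interval.

```python
from statistics import NormalDist

def mean_diff_ci(m1, sd1, n1, m2, sd2, n2, level=0.95):
    """Approximate CI for m1 - m2 using a normal quantile (illustrative)."""
    diff = m1 - m2
    se = (sd1 ** 2 / n1 + sd2 ** 2 / n2) ** 0.5
    z = NormalDist().inv_cdf(1 - (1 - level) / 2)
    return diff - z * se, diff + z * se

# Hypothetical non-significant result: two groups of 40
lower, upper = mean_diff_ci(5.3, 1.0, 40, 5.0, 1.0, 40)
print(f"difference = 0.30, 95% CI [{lower:.2f}, {upper:.2f}]")
```

The interval includes zero, but it also includes differences of about 0.7 standard deviations, so the data do not rule out a sizeable effect. That is more informative than a post hoc power value, which is just a transformation of the p-value.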
